Book Chapter 41 Cyberinfrastructure

Chapter 41: Cyberinfrastructure and Community Environmental Modeling

J.P.M. Syvitski, Scott D. Peckham and Eric W.H. Hutton, Community Surface Dynamics Modeling System, Institute of Arctic and Alpine Research, University of Colorado Boulder, Boulder, CO 80309-0545

Olaf David, Dept. of Civil and Environmental Engineering & Dept. of Computer Science, Colorado State University, Fort Collins, CO 80523

Jonathan L. Goodall, Department of Civil and Environmental Engineering, University of South Carolina, Columbia, SC 29208

Cecelia Deluca, Cooperative Institute for Research in the Environmental Sciences, University of Colorado Boulder, Boulder, CO 80309

Gerhard Theurich, Science Applications International Corporation

Introduction

The development of cyberinfrastructure in the field of Environmental Fluid Dynamics has been long and tortuous. With the proliferation of acceptable core university courses in the sciences and engineering in the 1960s and 1970s, and the reduction in core credits needed for graduation, a knowledge gulf has grown between computational scientists and software engineers (Wilson and Lumsdaine 2008[1]). Yet Computational Science and Engineering (CSE) has grown rapidly at most research universities, particularly in the 1990s and 2000s, penetrating to some extent most science and engineering disciplines (McMail 2008[2]). Large codes by their nature involved more than one environmental domain, for example wind-driven currents and wave dynamics in oceanography, or channelized flow, overland flow, and groundwater flow in hydrology. As these codes grew in source lines, so did the diversity of experts needed to develop them, and thus community modeling was born. Models require readily available data and data systems, and community-modeling efforts both supported and directed new field campaigns and observational systems (e.g. satellites, ships, planes, telecommunication). Inevitably, when the codes reached a certain size (say 50,000 lines of code), they became modeling frameworks. Because these rigid frameworks were too large for any individual to understand, developers would pass on their process modules to one or more masters of the code for implementation. When these large framework-models were to be linked to other framework-models, such as an ocean general circulation model (GCM) to an atmospheric GCM, they were so rigid that flux couplers unique to the domains being coupled were required. The great attention these couplers paid to the details of grid meshing, time stepping, computational precision, and data I/O made them rigid as well.
Meanwhile, the field of software engineering had been changing rapidly, developing new standards for data exchange, model interfaces, and ways to employ varied computational platforms (laptop, server, high-performance computing clusters, distributed and cloud computing). This chapter is written by a combination of software engineers and scientists behind four representative cyberinfrastructure projects in the field of environmental community modeling. Each project faced different cyber requirements, different but overlapping scientific communities, different funding histories, and to some extent different customers. The projects support one another, a necessity given limited funds.

Principles

Concepts like Direct Numerical Simulation (DNS), Large-Eddy Simulation (LES), and Reynolds-Averaged Navier-Stokes (RANS) simulation, as defined in other chapters of this textbook, are familiar to most working in the field of CSE. But CSE experts are less familiar with the jargon of cyberinfrastructure, even though cyberinfrastructure underpins computation, often called the third pillar of science. Below we provide a window into the key principles behind modern community modeling and cyberinfrastructure efforts (see section 1.3), including Frameworks, Architectures, Component-based Modeling, Interface Standards, Drivers, Ports and Protocols.

Frameworks and Architectures

Driving forces for framework adoption are: (1) saving time and reducing costs, (2) providing quality assurance and control, (3) re-purposing model solutions for new business needs, (4) ensuring consistency and traceability of model results, and (5) mastering computing scalability to solve complex modeling problems. A framework should allow the model developer to develop and deliver a simulation model efficiently, and thereby increase modeling productivity.

Environmental modeling frameworks support the development and deployment of environmental simulation models. Functions provided may include support for organizing models into functional units (e.g. components, classes, or modules) and coupling them, component interaction and communication, time stepping, regridding of arrays, upscaling or downscaling of spatial data, multiprocessor support, and cross-language interoperability. A framework may also provide a uniform method of trapping or handling exceptions (i.e. errors).
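Upscaling and downscaling are among the simplest of these framework services. The sketch below is a minimal illustration, assuming a regular 2-D grid stored as nested Python lists and an integer refinement factor; the function names `upscale_block_mean` and `downscale_repeat` are hypothetical, and production frameworks typically offer conservative or bilinear regridding on far more general grids.

```python
def upscale_block_mean(grid, factor):
    """Coarsen a 2-D grid by averaging non-overlapping factor x factor blocks."""
    nrows, ncols = len(grid), len(grid[0])
    if nrows % factor or ncols % factor:
        raise ValueError("grid dimensions must be divisible by factor")
    coarse = []
    for i in range(0, nrows, factor):
        row = []
        for j in range(0, ncols, factor):
            block = [grid[i + di][j + dj]
                     for di in range(factor) for dj in range(factor)]
            row.append(sum(block) / len(block))
        coarse.append(row)
    return coarse


def downscale_repeat(grid, factor):
    """Refine a 2-D grid by copying each cell value into a factor x factor block."""
    return [[value for value in row for _ in range(factor)]
            for row in grid for _ in range(factor)]


fine = [[1, 2, 3, 4],
        [5, 6, 7, 8],
        [9, 10, 11, 12],
        [13, 14, 15, 16]]
coarse = upscale_block_mean(fine, 2)   # [[3.5, 5.5], [11.5, 13.5]]
```

Block averaging preserves the grid mean, but repeating values on the way back down cannot recover the detail that was averaged away; that asymmetry is one reason such operations are usually left to a well-tested framework service.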

An Architecture is the set of standards that allow components to be combined and integrated for enhanced functionality, for instance on high-performance computing systems. The standards are necessary for the interoperation of components developed in the context of different frameworks. Software components that adhere to these standards can be ported with relative ease to another compliant framework.

Modularity, Components and Component-based Modeling

Modularity applies to the development of individual software modules, often with a standardized interface that allows different modules to communicate. Typically, partitioning a system into modules helps minimize coupling and leads to code that is easier to maintain.

Components are functional units that, once implemented in a particular framework, are reusable in other models within the same framework with little migration effort. One advantage of using a modeling framework is that pre-existing components can be reused to facilitate model development. Component-based modeling brings the advantages of “plug and play” technology. Component programming builds upon the fundamental concepts of object-oriented programming, the main difference being the presence of a framework. Components are generally implemented as classes in an object-oriented language, and are essentially "black boxes" that encapsulate some useful bit of functionality. They are often distributed as a library file, that is, a shared object (.so file, Unix), a dynamically linked library (.dll file, Windows), or a dynamic library (.dylib file, Macintosh). A framework then provides an environment in which components can be linked together to form applications. One thing that typically distinguishes components from ordinary subroutines, software modules or classes is that they can communicate with other components written in a different programming language.

Interfaces and Drivers

Components typically provide one or more interfaces by which a caller can access their functionality. The word "interface" refers to a boundary between two things and what happens at the boundary. An interface can be between a human and a computer program, such as a command-line interface (CLI), or a graphical user interface (GUI). In the context of plug-and-play components, the word interface refers to a named set of member functions (also called methods), each defined completely with regard to argument types and return types but without any actual implementation. An interface is a user-defined type, similar to an abstract class, with member function "templates" but no data members.

If a component does "have" a given interface, then it is said to expose or implement that interface, meaning that it contains an actual implementation for each of those member functions. It is perfectly fine if the component has additional member functions beyond the ones that comprise a particular interface. Because of this, it is possible and often useful for a single component to expose multiple, different interfaces. This allows it to be used in a greater variety of settings.
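In a language such as Python, an interface in this sense can be approximated by an abstract base class whose methods have signatures but no implementation. The sketch below is illustrative only: the interface names `IRF` and `Describable` and the class `ToyModel` are hypothetical, not part of any framework discussed in this chapter. It shows one component exposing two different interfaces while also carrying a method that belongs to neither.

```python
from abc import ABC, abstractmethod


class IRF(ABC):
    """A model-control interface: method signatures only, no data members."""

    @abstractmethod
    def initialize(self, config: dict) -> None: ...

    @abstractmethod
    def run(self, until: float) -> None: ...

    @abstractmethod
    def finalize(self) -> None: ...


class Describable(ABC):
    """A second, unrelated interface that a component may also expose."""

    @abstractmethod
    def component_name(self) -> str: ...


class ToyModel(IRF, Describable):
    """Implements (exposes) both interfaces."""

    def initialize(self, config):
        self.time = 0.0
        self.dt = config.get("dt", 1.0)
        self.finished = False

    def run(self, until):
        while self.time < until:
            self.time += self.dt

    def finalize(self):
        self.finished = True

    def component_name(self):
        return "ToyModel"

    def diagnostics(self):
        # Extra method outside both interfaces; this is allowed.
        return {"time": self.time, "dt": self.dt}


model = ToyModel()
model.initialize({"dt": 0.5})
model.run(until=2.0)
```

Because `ToyModel` passes `isinstance` checks for both abstract classes, a driver that only knows `IRF` and a catalog tool that only knows `Describable` could each use it without knowing about the other, and `IRF` itself cannot be instantiated because it has no implementation.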

Most surface dynamics models advance values forward in time on a grid or mesh and have a similar internal structure: lines of code before the beginning of a time loop (the initialize step), lines of code inside the time loop (the run step), and lines of code after the end of the time loop (the finalize step). Virtually all component-based modeling efforts (e.g. ESMF, OpenMI, OMS, CSDMS) recognize the utility of moving these lines of code into three separate functions, with names such as Initialize, Run and Finalize. These three functions constitute a simple model-component interface that we refer to as an ‘IRF interface’. Such an interface gives a calling program fine-grained access to a model's capabilities and the ability to control its time stepping, so that the model can be used in a larger application. The calling program "steers" a set of components and so is referred to as a driver.
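To make the refactoring concrete, here is a minimal sketch, assuming a toy linear-reservoir model (dS/dt = -kS, advanced with explicit Euler steps) whose original time loop has been split into `initialize`, `run`, and `finalize` methods. The class, its parameters, and the `driver` function are hypothetical illustrations of the pattern, not code from ESMF, OpenMI, OMS, or CSDMS.

```python
class LinearReservoir:
    """Toy model, dS/dt = -k * S, whose time loop was split into IRF steps."""

    def initialize(self, storage=100.0, k=0.1, dt=1.0):
        # Code that used to sit before the time loop.
        self.storage, self.k, self.dt, self.time = storage, k, dt, 0.0

    def run(self):
        # The body of the former time loop: advance exactly one step.
        self.storage -= self.k * self.storage * self.dt
        self.time += self.dt

    def finalize(self):
        # Code that used to sit after the time loop.
        return {"time": self.time, "storage": self.storage}


def driver(model, n_steps):
    """A calling program that steers the component's time stepping."""
    model.initialize()
    for _ in range(n_steps):
        model.run()
        # A driver could exchange data with other components here,
        # between steps, which a monolithic time loop does not allow.
    return model.finalize()


result = driver(LinearReservoir(), n_steps=2)
```

The model no longer owns its time loop; the driver does, and that is what lets a driver interleave the steps of several components.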

Provides Ports and Uses Ports

A meaningful linkage of components often requires more than just the IRF functions; most linkages also require data exchange. Therefore, a model's interface must also describe functions that access the data it wishes to provide (getter functions) as well as functions that allow other components to change its data (setter functions). With getter and setter interface functions, connected components can query the data another model generates as well as alter that model's data.
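The sketch below shows, under simplifying assumptions, how getter and setter functions let a driver pass data between two components that know nothing about each other. The method names `get_value` and `set_value`, the variable names, and both toy components are hypothetical choices made for this illustration.

```python
class RainGauge:
    """Produces a rainfall rate and exposes it through a getter."""

    def __init__(self, series):
        self._series, self._step = list(series), 0

    def update(self):
        self._step += 1

    def get_value(self, name):
        if name != "rainfall_rate":
            raise KeyError(name)
        return self._series[self._step]


class Bucket:
    """Stores inflow up to a capacity; lets other components set its inflow."""

    def __init__(self, capacity):
        self.capacity, self.storage, self._inflow = capacity, 0.0, 0.0

    def set_value(self, name, value):
        if name != "inflow_rate":
            raise KeyError(name)
        self._inflow = value

    def get_value(self, name):
        if name != "storage":
            raise KeyError(name)
        return self.storage

    def update(self, dt=1.0):
        # Fill the bucket; anything above capacity spills and is lost.
        self.storage = min(self.capacity, self.storage + self._inflow * dt)


def couple(gauge, bucket, n_steps):
    """Driver loop: copy one component's output into the other's input."""
    for _ in range(n_steps):
        bucket.set_value("inflow_rate", gauge.get_value("rainfall_rate"))
        bucket.update()
        gauge.update()
    return bucket.get_value("storage")


final_storage = couple(RainGauge([2.0, 3.0, 4.0]), Bucket(capacity=6.0), n_steps=3)
```

Neither component imports or references the other; only the driver knows that `rainfall_rate` should feed `inflow_rate`.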

Within a general component framework, a component has two types of connections with other components. These connections are made through ports, which come in two varieties, called ‘provides ports’ and ‘uses ports’ within a Common Component Architecture framework (Armstrong et al. 1999[3]). The first provides an interface to the component’s own functionality (and data). The second specifies a set of capabilities (or data) that the component requires from another component to complete its task. A provides-port presents to other components an interface that describes its functionality. For instance, a provides-port that exposes an IRF interface allows another component to gain access to its initialize, run, and finalize steps. Any interface can be exposed through a port, but a port can only be connected to another port with a matching interface.

The uses-port of a component describes functionality that the component lacks and therefore requires from another component. Any component that provides the required functionality can connect to it, and the component is not able to function until such a connection is made. This allows a model developer to create a new model that uses the functionality of another component without having to know the details of that component, or even without that component yet existing.
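A rough sketch of the idea, assuming Python abstract classes stand in for port interfaces: the component declares a uses-port by the interface it needs, and a connection is accepted only if the provider implements that interface. This is not the Common Component Architecture API; `EvaporationPort`, `WaterBalance`, `connect`, and both evaporation formulas are hypothetical.

```python
from abc import ABC, abstractmethod


class EvaporationPort(ABC):
    """The interface a port carries: one method, no data."""

    @abstractmethod
    def evaporation_rate(self, temperature_c: float) -> float: ...


class LinearEvaporation(EvaporationPort):
    """One provider of the interface (toy temperature-based formula)."""

    def evaporation_rate(self, temperature_c):
        return max(0.0, 0.2 * temperature_c)


class ConstantEvaporation(EvaporationPort):
    """An alternative provider that can be plugged in instead."""

    def evaporation_rate(self, temperature_c):
        return 1.5


class WaterBalance:
    """Declares a uses-port: it needs *some* EvaporationPort provider."""

    uses_ports = {"evaporation": EvaporationPort}

    def __init__(self):
        self._connections = {}

    def connect(self, port_name, provider):
        required = self.uses_ports[port_name]
        if not isinstance(provider, required):
            raise TypeError(f"{type(provider).__name__} does not provide {required.__name__}")
        self._connections[port_name] = provider

    def net_gain(self, rain, temperature_c):
        provider = self._connections.get("evaporation")
        if provider is None:
            raise RuntimeError("uses-port 'evaporation' is not connected")
        return rain - provider.evaporation_rate(temperature_c)


balance = WaterBalance()
balance.connect("evaporation", LinearEvaporation())
gain_linear = balance.net_gain(rain=5.0, temperature_c=10.0)      # 3.0
balance.connect("evaporation", ConstantEvaporation())            # swap providers
gain_constant = balance.net_gain(rain=5.0, temperature_c=10.0)    # 3.5
```

`WaterBalance` was written against the interface alone, so swapping `LinearEvaporation` for `ConstantEvaporation` required no change to it, and an unconnected uses-port fails loudly rather than silently.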

This style of plug-and-play component programming benefits both model programmers and users. Within a framework, model developers are able to create models within their own areas of expertise and rely on experts outside their field to fill in the gaps. Models that provide the same functionality can easily be compared to one another simply by unplugging one model and plugging in another, similar model. In this way users can easily conduct model comparisons and more simply build larger models from a series of components to solve new problems.

Model Protocols

The procedures or system of rules governing contributed community software are referred to as its ‘protocols’, and provide both technical and social recommendations to model developers. The protocols for software contribution to the CSDMS Model Repository, for example, are:

- Software should hold an open-source license [e.g. GPL2 compatible; OSI approved].

- Software should be widely available to the community of scientists [e.g. CSDMS Model Repository; Computers & Geosciences Repository].

- Software should receive some level of vetting [e.g. a colleague; a CSDMS Working Group]. The software should be shown to do what it claims to do.

- Software should be written in an open-source language (C, C++, any Fortran, Java, Python), or have a pathway for use in an open-source environment [e.g. IDL & Matlab code can be made compatible].

- Code should be written or refactored to be a component with an interface (IRF), with specific I/O exchange items (getters, setters, grid information) documented.

- Code should be accompanied by metadata, e.g. the CSDMS model questionnaire, and test files (input files to run the model; output files to verify the model run).

- Code should be clean and documented, and annotated using keywords within comment blocks to provide basic metadata for the model and its variables.
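The last protocol can be made concrete with a small sketch. It assumes a hypothetical tag syntax (`@model key: value` and `@var name key=value ...` inside Fortran `!` or Python `#` comment lines) that is invented for this illustration and is not the actual CSDMS convention; the point is only that keyword-tagged comments can be harvested mechanically into metadata.

```python
import re

# Hypothetical tag syntax, invented for this sketch:
#   @model key: value            (model-level metadata)
#   @var   name key=value ...    (per-variable metadata)
TAG = re.compile(r"^\s*[!#]\s*@(model|var)\s+(.*)$")

SOURCE = """\
! @model name: LinearReservoir
! @model author: A. Modeler
! @var   storage units=m3 intent=out
! @var   inflow  units=m3/s intent=in
program reservoir
end program reservoir
"""


def extract_metadata(source):
    """Collect model and variable metadata from tagged comment lines."""
    meta = {"model": {}, "variables": {}}
    for line in source.splitlines():
        match = TAG.match(line)
        if not match:
            continue  # ordinary code or untagged comment
        kind, body = match.groups()
        if kind == "model":
            key, _, value = body.partition(":")
            meta["model"][key.strip()] = value.strip()
        else:
            name, *pairs = body.split()
            meta["variables"][name] = dict(p.split("=", 1) for p in pairs)
    return meta


metadata = extract_metadata(SOURCE)
```

Keeping the metadata inside the source file means it travels with the code and can be regenerated whenever the code changes.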

Methods & Applications

Below we provide details about four community projects that can be considered representative of the large suite of community cyberinfrastructure efforts. The CUAHSI HIS offers a service-oriented architecture, a unique interface specification, a data-exchange standard, and a data model, and its hydrological offerings have successfully penetrated academic and government research centers. OMS is a non-invasive modeling framework that employs metadata annotations and builds on a large library of legacy (Fortran) code developed originally by the U.S. Department of Agriculture, while being supported on modern computational platforms. ESMF is a computationally efficient modeling framework for scalable, mostly Fortran, operational codes (NOAA, NASA, NCAR, DoD), offering standards for gridded components and coupler components. CSDMS is a complete modeling environment built around a model repository of numerous research-grade codes; by employing the Common Component Architecture and Open Modeling Interface standards, with augmented services and tools, it offers model coupling, language interoperability, support for unstructured, structured and object-oriented code, framework interoperability, and support for structured and unstructured grids.

Hydrological Information System

The Consortium of Universities for the Advancement of Hydrologic Science, Inc. (CUAHSI) Hydrologic Information System (HIS) is a collection of standards and software components designed to enhance access to water data (Maidment 2008[4]). A multi-university team (U. Texas at Austin, Utah State U., San Diego Supercomputer Center, U. South Carolina, Drexel U., and Idaho State U.) supports the development and design of CUAHSI HIS.

CUAHSI HIS follows a Service-Oriented Architecture (SOA) approach for organizing complex, geographically distributed software systems. In SOA design, system components are loosely coupled and communicate through web services that have standardized interfaces with clearly defined protocols and data exchange specifications (Erl 2005[5]). An important innovation of CUAHSI HIS is its set of web service standards for communicating water data between software components within a distributed computing environment. These web service standards include an interface specification termed WaterOneFlow that defines how a client application requests data from the web service, and a data exchange standard termed the Water Markup Language (WaterML) that specifies how water data is encoded when transferred from the web service to client applications. Both WaterOneFlow and WaterML extend core web service standards established by the World Wide Web Consortium, making these general standards more specific and useful to the hydrologic community. Although an end user may never see the WaterOneFlow and WaterML standards, these core design specifications are a critical innovation that pervades the entire HIS cyberinfrastructure and allows the distributed software components to function as an integrated system.
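Because every HIS server encodes its responses the same way, a client needs only one parser. The sketch below uses Python's standard `xml.etree.ElementTree` on a simplified, hypothetical response whose element names are loosely modeled on WaterML 1.x; it omits namespaces and most required elements, so it is not schema-valid WaterML, and the site, units, and values are made up.

```python
import xml.etree.ElementTree as ET

# Simplified, WaterML-like response (not schema-valid; for illustration only).
RESPONSE = """<timeSeriesResponse>
  <timeSeries>
    <sourceInfo><siteName>Example Creek</siteName><siteCode>EX001</siteCode></sourceInfo>
    <variable><variableCode>Q</variableCode><unitCode>cfs</unitCode></variable>
    <values>
      <value dateTime="2009-06-01T00:00:00">12.5</value>
      <value dateTime="2009-06-01T01:00:00">13.1</value>
      <value dateTime="2009-06-01T02:00:00">12.9</value>
    </values>
  </timeSeries>
</timeSeriesResponse>"""


def parse_series(xml_text):
    """Return (site code, unit, [(timestamp, value), ...]) from one time series."""
    root = ET.fromstring(xml_text)
    ts = root.find("timeSeries")
    site = ts.findtext("sourceInfo/siteCode")
    unit = ts.findtext("variable/unitCode")
    values = [(v.get("dateTime"), float(v.text)) for v in ts.iter("value")]
    return site, unit, values


site, unit, values = parse_series(RESPONSE)
```

A real client would also handle the WaterML namespaces and check units and quality-control metadata; those details are omitted here.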

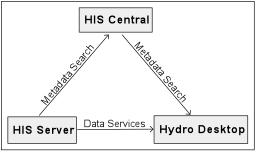

A set of software components was also created to support publishing, discovering, and accessing water data. Millions of water data records are indexed within the HIS, and the database is growing rapidly. The software components are organized into three applications: HIS Server, HIS Central, and HydroDesktop (Figure 1.1). HIS Server is designed as a tool to support scientific investigators in storing, publishing, and analyzing observational data (Tarboton et al. 2009[6]). The core of the HIS Server is the Observation Data Model (ODM), a database schema for representing point observational data (Horsburgh et al. 2008[7]). Investigators can use utility software such as the ODM Data Loader to populate an ODM relational database with their own field or sensor data. Once the data has been loaded into an ODM database, software in the HIS Server stack makes that data accessible to client applications using the HIS web service standards. The software automates the process of handling data requests, querying and extracting data from the database, and translating the requested data into the WaterML schema before returning it to the requesting client application.
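A heavily simplified sketch of what an ODM-style store and a server-side query might look like, using Python's built-in `sqlite3`. The three tables are loosely inspired by ODM's separation of sites, variables, and data values, but the column set is an assumption and far smaller than the real ODM schema; the site and values are made up for illustration.

```python
import sqlite3

# A deliberately tiny, ODM-inspired schema (column set is an assumption).
SCHEMA = """
CREATE TABLE Sites (SiteID INTEGER PRIMARY KEY, SiteCode TEXT UNIQUE,
                    SiteName TEXT, Latitude REAL, Longitude REAL);
CREATE TABLE Variables (VariableID INTEGER PRIMARY KEY, VariableCode TEXT UNIQUE,
                        VariableName TEXT, Units TEXT);
CREATE TABLE DataValues (ValueID INTEGER PRIMARY KEY,
                         SiteID INTEGER REFERENCES Sites(SiteID),
                         VariableID INTEGER REFERENCES Variables(VariableID),
                         LocalDateTime TEXT, DataValue REAL);
"""


def load_example(conn):
    """Play the role of a data loader: create tables and insert a few rows."""
    conn.executescript(SCHEMA)
    conn.execute("INSERT INTO Sites VALUES (1, 'EX001', 'Example Creek', 40.0, -105.3)")
    conn.execute("INSERT INTO Variables VALUES (1, 'Q', 'Discharge', 'cfs')")
    conn.executemany(
        "INSERT INTO DataValues (SiteID, VariableID, LocalDateTime, DataValue) "
        "VALUES (1, 1, ?, ?)",
        [("2009-06-01T00:00", 12.5), ("2009-06-01T01:00", 13.1),
         ("2009-06-01T02:00", 12.9)],
    )


def get_values(conn, site_code, variable_code, start, end):
    """The kind of query a server runs before encoding results for a client."""
    return conn.execute(
        """SELECT d.LocalDateTime, d.DataValue
           FROM DataValues d
           JOIN Sites s ON s.SiteID = d.SiteID
           JOIN Variables v ON v.VariableID = d.VariableID
           WHERE s.SiteCode = ? AND v.VariableCode = ?
             AND d.LocalDateTime BETWEEN ? AND ?
           ORDER BY d.LocalDateTime""",
        (site_code, variable_code, start, end),
    ).fetchall()


conn = sqlite3.connect(":memory:")
load_example(conn)
rows = get_values(conn, "EX001", "Q", "2009-06-01T01:00", "2009-06-01T02:00")
```

In the HIS design, rows like these would then be translated into WaterML before being returned, so clients never see the database layout.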

HIS Central provides the ability to index, integrate, and search for data stored within multiple HIS Servers and other databases exposed using the CUAHSI HIS web service standard (Tarboton et al. 2009[6]). It consists of a central registry of CUAHSI HIS web services, a metadata catalog harvested from registered web services, and semantic mediation and search capabilities that enable discovery of information distributed across multiple web services. Once a HIS Server is registered in HIS Central, the software uses the HIS web services to extract and store metadata associated with the observational data stored within that server. By design, only the metadata associated with observations (e.g., the site locations, the variable names, measurement units, etc.) are stored within HIS Central to support search and discovery applications; the data values themselves are not stored within HIS Central in order to reduce data storage volumes. Semantic mediation is conducted by requiring data providers to perform a semantic tagging operation where variable names in their own dataset are related to variable concepts within the HIS ontology. Software tools such as the Hydrotagger support the user in the semantic mediation process. Once the dataset has been related to the HIS ontology, it becomes searchable through various client applications that access HIS data.
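The division of labor described above (metadata harvested centrally, values left on the originating server, and provider-specific variable names mapped to shared concepts) can be sketched in a few lines. The `Catalog` class, the record fields, the server names, and the concept name `Streamflow` are hypothetical; they are not the HIS Central implementation or the HIS ontology.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SeriesMetadata:
    server: str          # HIS Server that still holds the data values
    site_code: str
    variable_name: str   # the provider's own variable name
    units: str


class Catalog:
    """Central index of metadata only; no data values are stored here."""

    def __init__(self):
        self.records = []
        self.tags = {}   # (server, local variable name) -> ontology concept

    def harvest(self, records):
        self.records.extend(records)

    def tag(self, server, variable_name, concept):
        # Semantic tagging: a provider relates its own name to a shared concept.
        self.tags[(server, variable_name)] = concept

    def search(self, concept):
        return [r for r in self.records
                if self.tags.get((r.server, r.variable_name)) == concept]


catalog = Catalog()
catalog.harvest([
    SeriesMetadata("server-a", "A1", "Q", "cfs"),
    SeriesMetadata("server-b", "B7", "Discharge", "m3/s"),
    SeriesMetadata("server-b", "B7", "Water temperature", "degC"),
])
catalog.tag("server-a", "Q", "Streamflow")
catalog.tag("server-b", "Discharge", "Streamflow")
hits = catalog.search("Streamflow")
```

A search for one concept finds both discharge series despite their different local names, and a client would then fetch the values for each hit directly from `hit.server`, which is why the catalog itself can stay small.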

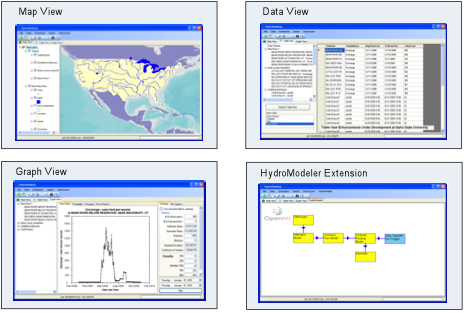

HydroDesktop (formerly HIS Desktop) is the primary client application in the CUAHSI HIS software suite, and allows users to easily search, download, and visualize datasets indexed within HIS Central (Figure 1.2). HydroDesktop is a client application with core functionality for data management and visualization, and it is also extensible through the development of third-party software extensions (i.e., HydroDesktop plug-ins). HydroDesktop provides an ability to search the HIS Central metadata catalog for data and to download data of interest directly from the HIS Server where that data resides. Downloaded data can be visualized in a map view that leverages Geographic Information System (GIS) technology, a graph view designed specifically for visualizing time series data, and a data view that provides a user interface to the raw data stored in the underlying database. An example of a plug-in that extends the core HydroDesktop functionality is an integrated modeling environment called HydroModeler that adopts the Open Modeling Interface (OpenMI) standard for linking model components into workflows. The end goal of the HydroDesktop application is to provide an easy-to-use and easy-to-extend software tool through which scientists, students, and practitioners can leverage the growing HIS data archive.

The CUAHSI HIS addresses the problem of data gathering and integration common in hydrologic analysis and modeling. Because hydrologic models, particularly those at the catchment and watershed-scale, require a significant amount of data to digitally describe the natural environment (e.g., observations, terrain, soil, vegetation, etc.), hydrologists devote a significant amount of time to locating, reformatting, and integrating these data to support modeling and analysis activities. Even simple analyses that require cross-site integration of data are hindered by inconsistent data formats and data access protocols used in the hydrology community. New remote and in situ sensing technologies promise to amplify this challenge with exponential increases in scientific data generated over the coming decades. For this reason, standardization of how water data is digitally communicated must not only focus on allowing humans to more easily find and leverage databases, but also on teaching machines to access and effectively synthesize data across sites, organizations, and disciplinary boundaries.

CUAHSI HIS is designed to aid research investigators in their efforts to advance hydrologic science and education in the United States. Many federal datasets are also registered in HIS Central, so that CUAHSI HIS provides integrated access to national-scale datasets describing streamflow, groundwater, water quality, and climate conditions. Some U.S. state government agencies have also adopted CUAHSI HIS approaches for integrating water data to support water management mandates. The CUAHSI HIS is also being implemented outside of the United States, for example in Australia, to better account for water resources given severe recent drought conditions. These government-collected datasets can be of great use to hydrologic researchers and educators, and integrating them also aids state and federal efforts to achieve interagency integration of water data.

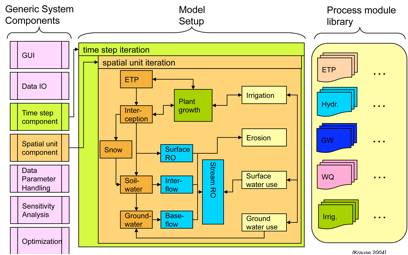

Object Modeling System

The Object Modeling System (OMS) is an integrated environmental modeling framework. Four foundations have been identified for OMS3 (Figure 1.3): modeling resources, the system knowledge base, development tools, and the modeling products. OMS3 consists of an internal knowledge base and development tools for creating models and simulations. The system derives information from various modeling resources, such as databases, services, version control systems, or other repositories, and transforms it into a framework knowledge base that the OMS3 development tools use to create modeling products. Products include model applications; simulations that support calibration, optimization, and parameter sensitivity analysis; output analyses; audit trails; and documentation. Implementing OMS3 requires a commitment to a structured model and simulation development process, such as the use of a version control system for model source code management, or a simulation run database to store audit trails. Such features are important for institutionalized implementation of OMS3, although an individual modeler need not adhere to them.

OMS3 adheres to the notion of objects as the fundamental building blocks for a model and to the principles of component based software engineering for the model development process. The models within the OMS3 Framework are objects or components. However, the design of OMS is unique in that it is considered non-invasive and sees models and components as plain objects with metadata by means of annotations. Modelers do not have to learn an extensive object-oriented Application Programming Interface (API), nor do they have to comprehend complex design patterns. Instead, OMS3 plain objects are perfect fits as modeling components as long as they communicate the location of their (i) processing logic, and (ii) data flow. Annotations do this in a descriptive, non-invasive way.

Most agro-environmental modelers, at least early in the development life cycle, are natural resource scientists with experience in programming (often self-taught), but not in software architecture and design. Most modeling projects do not have the luxury of employing an experienced software engineer or computer scientist. Software engineers understand and apply complex design patterns, UML diagrams, advanced object-oriented techniques such as parameterized types, or higher-level data structures and composition. A hydrologist or other natural resource scientist may lack these skills. The targeted use of object-oriented analysis and design principles could be productive for a specific model with limited expectations for reuse and extensibility. However, for a framework, the extensive use of object-oriented features for models puts an undesirable burden on the scientist.

The agro-environmental modeling community maintains a large number of legacy models. Some methods and equations still in use were developed as long as 60 years ago. What has changed, and will continue to change, is the infrastructure around them that delivers the output from these models. A lightweight framework adjusts to an existing design rather than defining its own specification or API. The learning curve is small, as there is no complex API to learn and there are no new data types to manage. This has very practical implications for a modeler, since using existing modeling code and libraries requires no major paradigm shift.

Since OMS3 is a non-invasive modeling framework, the modeler does not need an extensive knowledge of object-oriented principles to integrate a model with the framework. Creating a modeling object is very easy: there are no interfaces to implement, no classes to extend, no polymorphic methods to override, and no framework-specific data types to replace common native language data types. OMS3 uses metadata by means of annotations to specify and describe "points of interest" for existing data fields and methods for the framework.
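OMS3 itself is written in Java and uses Java annotations (for example `@In`, `@Out`, and `@Execute`) for this purpose. The sketch below is a Python analogue of the idea, not OMS3 code: `typing.Annotated` plays the role of field annotations, and the markers `In` and `Out` and the `execute` decorator are hypothetical stand-ins. The component is a plain class with no base class; a framework discovers its inputs, outputs, and processing method purely from metadata. It assumes Python 3.9 or later.

```python
from typing import Annotated, get_type_hints


class In:
    """Metadata marker: the field is a component input."""


class Out:
    """Metadata marker: the field is a component output."""


def execute(method):
    """Metadata marker: this method holds the processing logic."""
    method._is_execute = True
    return method


class Snowmelt:
    """A plain class: no framework base class, interface, or data types."""

    temperature: Annotated[float, In] = 0.0        # degC
    degree_day_factor: Annotated[float, In] = 3.0  # mm per degC per day
    melt: Annotated[float, Out] = 0.0              # mm per day

    @execute
    def compute(self):
        self.melt = max(0.0, self.degree_day_factor * self.temperature)


def annotated_fields(cls, marker):
    """Names of class fields whose annotation metadata contains `marker`."""
    hints = get_type_hints(cls, include_extras=True)
    return [name for name, hint in hints.items()
            if marker in getattr(hint, "__metadata__", ())]


def run_component(obj, inputs):
    """What a framework might do: set inputs, call the marked method, read outputs."""
    cls = type(obj)
    for name in annotated_fields(cls, In):
        if name in inputs:
            setattr(obj, name, inputs[name])
    method = next(m for m in vars(cls).values() if getattr(m, "_is_execute", False))
    method(obj)
    return {name: getattr(obj, name) for name in annotated_fields(cls, Out)}


outputs = run_component(Snowmelt(), {"temperature": 2.0})   # {'melt': 6.0}
```

`Snowmelt` would run unchanged outside the framework, since the annotations are only descriptive; that is the sense in which such a design is non-invasive.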

There are several operational and research-focused OMS3 model applications to date. The National Water and Climate Center (NWCC) of the US Department of Agriculture (USDA) Natural Resources Conservation Service (NRCS) is moving to augment seasonal, regression-equation-based water supply forecasts with shorter-term forecasts based on distributed-parameter, physical process hydrologic models and an Ensemble Streamflow Prediction (ESP) methodology. The primary model base is built using OMS3 and the Precipitation-Runoff Modeling System (PRMS) watershed model. The model collection will be used to help address a wide variety of water-user requests for more information on the volume and timing of water availability, and to improve forecast accuracy. This effort involves developing and implementing a modeling framework and associated models and tools to provide timely forecasts for the agricultural community in the western U.S., where snowmelt is a major source of water supply.

At the USDA-ARS Agricultural Systems Research Unit, an OMS3-based, component-oriented hydrological system for fully distributed simulation of water quantity and quality in large watersheds is being developed. The AgES-WS (AgroEcosystem Watershed) model was evaluated on the Cedar Creek Watershed (CCW) in northeastern Indiana, USA, one of 14 benchmark watersheds in the USDA-ARS Conservation Effects Assessment Project (CEAP) watershed assessment study. Model performance for daily, monthly, and annual stream flow response using non-calibrated and manually calibrated parameter sets was assessed using the Nash-Sutcliffe model efficiency (ENS), coefficient of determination (R2), root mean square error (RMSE), relative absolute error (RAE), and percent bias (PBIAS) model evaluation statistics. The results show that the prototype AgES-WS watershed model reproduced the hydrological dynamics with sufficient quality and, more importantly, should serve as a foundation on which to build a more comprehensive model to better quantify water quantity and quality at the watershed scale. The study is unique in that it represents the first attempt to develop and apply a complex natural resource system model under OMS3.
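For readers less familiar with these statistics, the sketch below computes them for paired observed (O) and simulated (S) series using common definitions: ENS = 1 - Σ(O-S)² / Σ(O-Ō)²; R2 as the squared Pearson correlation; RMSE = sqrt(mean((O-S)²)); RAE = Σ|O-S| / Σ|O-Ō|; and PBIAS = 100·Σ(O-S)/ΣO, under which a positive value indicates underestimation. Conventions for R2 and for the sign of PBIAS vary between studies, and the exact definitions used in the AgES-WS evaluation are not stated here, so treat these as assumptions.

```python
import math


def evaluate(obs, sim):
    """Goodness-of-fit statistics for paired observed and simulated values."""
    n = len(obs)
    if n != len(sim) or n < 2:
        raise ValueError("need two equal-length series of at least 2 values")
    mean_o, mean_s = sum(obs) / n, sum(sim) / n
    sse = sum((o - s) ** 2 for o, s in zip(obs, sim))   # sum of squared errors
    sst = sum((o - mean_o) ** 2 for o in obs)            # spread of observations
    cov = sum((o - mean_o) * (s - mean_s) for o, s in zip(obs, sim))
    var_s = sum((s - mean_s) ** 2 for s in sim)
    return {
        "ENS": 1.0 - sse / sst,
        "R2": cov ** 2 / (sst * var_s),
        "RMSE": math.sqrt(sse / n),
        "RAE": sum(abs(o - s) for o, s in zip(obs, sim))
               / sum(abs(o - mean_o) for o in obs),
        "PBIAS": 100.0 * sum(o - s for o, s in zip(obs, sim)) / sum(obs),
    }


stats = evaluate(obs=[2.0, 4.0, 6.0, 8.0], sim=[1.0, 3.0, 5.0, 7.0])
# approximately: ENS 0.8, R2 1.0, RMSE 1.0, RAE 0.5, PBIAS 20.0
```

The example simulation is perfectly correlated with the observations (R2 = 1) yet consistently low, which ENS, RMSE, and PBIAS all detect; this is why several statistics are usually reported together.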

OMS3 represents an easy-to-use, transparent, and scalable implementation of an environmental modeling framework. In OMS3, the internal complexity of the framework itself was vastly reduced while allowing models to scale implicitly from multi-core desktops to clusters and clouds, without burdening the model developer with complex technical details.

Earth System Modeling Framework

The Earth System Modeling Framework is open source software for building model components, and coupling them together to form applications. ESMF was motivated by the desire to increase collaboration and capabilities, and reduce cost and effort, by sharing codes. It was initiated in 2002 under NASA funding and has evolved to multi-agency support and management. The project is distinguished by its strong emphasis on community governance and distributed development, and by a diverse customer base that includes modeling groups from universities, major U.S. research centers, the National Weather Service, the Department of Defense, and NASA. Some of the major codes that have implemented ESMF coupling interfaces include the Community Climate System Model (CCSM4), the NOAA National Environmental Modeling System (NEMS), the NASA GEOS-5 atmospheric general circulation model, the Weather Research and Forecast (WRF) model, and the Coupled Ocean Atmosphere Mesoscale Prediction System (COAMPS). The ESMF core development team is based at the NOAA Earth System Research Laboratory.

ESMF was originally designed for tightly coupled models (Hill et al. 2004). Tight coupling is exemplified by the data exchanges between the ocean and atmosphere components of a climate model: a large volume of data is exchanged frequently, and computational efficiency is a primary concern. Such models usually run on a single computer with hundreds or thousands of processors, low-latency communications, and a Unix-based operating system. Almost all components in these domains are written in Fortran, with just a few in C or C++. A large number of hardware vendors, compilers, and configurations must be supported, and ESMF is regression tested nightly on more than 24 platforms.

As the ESMF customer base has grown to include modelers from other disciplines, such as hydrology and space weather, the framework has evolved to support other forms of coupling. For these modelers, ease of configuration, ease of use, and support for heterogeneous components may take precedence over performance. Heterogeneity here refers to programming language (Python, Java, etc. in addition to Fortran and C), function (components for analysis, visualization, etc.), grids and algorithms, and operating systems. In response, the ESMF team has introduced support for the Windows platform, and is exploring an approach to language interoperability through a simple switch that converts components to web services. It has introduced more general data structures, including an unstructured mesh, and several strategies for looser coupling, in which components may be in separate executables, or running on different computers.

The ESMF architecture is based on the concept of component software elements. Components are ideally suited for the representation of a system composed of a set of substantial, distinct and interacting elements, such as the atmosphere, land, sea ice and ocean, and their sub-processes. Component-based software is also well suited for the manner in which Earth system models are developed and used. The multiple domains and processes in a model are usually developed as separate codes by specialists. The creation of viable applications requires the integration, testing and tuning of these pieces, a scientifically and technically formidable task. When each piece is represented as a component with a standard interface and behavior, that integration, at least at the technical level, is more straightforward. Interoperability of components is a primary concern for researchers, since they are motivated to explore and maintain alternative versions of algorithms (such as different implementations of the governing fluid equations of the atmosphere), whole physical domains (such as oceans), parameterizations (such as convection schemes), and configurations (such as a standalone version of the atmosphere).

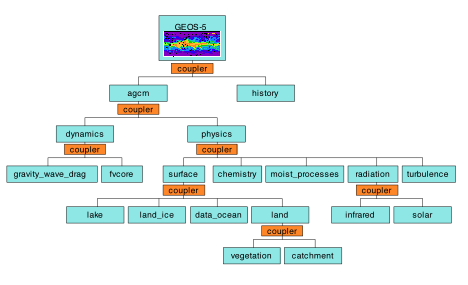

There are two types of components in ESMF, Gridded Components and Coupler Components. Gridded Components (ESMF_GridComps) wrap the scientific and computational functions in a model, and Coupler Components (ESMF_CplComps) wrap the operations necessary to transform and transfer data between them. ESMF Components can be nested, so that parent components can contain child components with progressively more specialized processes or refined grids.

As an Earth system model steps forward in time, the physical domains represented by Gridded Components must periodically transfer interfacial fluxes. The operations necessary to couple Gridded Components together may involve data redistribution, spectral or grid transformations, time averaging, and/or unit conversions. In ESMF, a Coupler Component encapsulates these interactions. Coupler Components share the same standard interfaces and arguments as Gridded Components. A key data structure in these interfaces is the ESMF_State object, which holds the data to be transferred between components.

Each Gridded Component is associated with an import State, containing the data required for it to run, and an export State, containing the data it produces. Coupler Components arrange and execute the transfer of data from the export States of producer Gridded Components into the import States of consumer Gridded Components. The same Gridded Component can be a producer or consumer at different times during model execution.
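
To make the import/export mechanism concrete, here is a minimal Python sketch. It is not ESMF code: `State`, `Atmosphere`, `Ocean` and `Coupler` are hypothetical stand-ins, the field names are invented, and the "physics" is placeholder arithmetic. What it does illustrate is the data flow described above: each Gridded Component reads only its import State and writes only its export State, a Coupler Component moves (and here unit-converts) fields from a producer's export State into a consumer's import State, and each component acts as both producer and consumer during the run.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


@dataclass
class State:
    """Hypothetical stand-in for an ESMF_State: a container of named fields."""
    fields: Dict[str, float] = field(default_factory=dict)


class Atmosphere:
    """Toy Gridded Component: imports SST (kelvin), exports wind stress (Pa)."""

    def __init__(self) -> None:
        self.import_state, self.export_state = State(), State()

    def run(self) -> None:
        sst_k = self.import_state.fields.get("sst_K", 288.15)
        # Placeholder physics, for illustration only.
        self.export_state.fields["wind_stress_Pa"] = 0.001 * (sst_k - 273.15)


class Ocean:
    """Toy Gridded Component: imports wind stress, exports SST (Celsius)."""

    def __init__(self) -> None:
        self.import_state, self.export_state = State(), State()
        self.sst_c = 15.0

    def run(self) -> None:
        stress = self.import_state.fields.get("wind_stress_Pa", 0.0)
        self.sst_c -= 10.0 * stress  # placeholder cooling by wind mixing
        self.export_state.fields["sst_C"] = self.sst_c


class Coupler:
    """Toy Coupler Component: copies fields from an export State into an
    import State, renaming and converting units along the way."""

    def __init__(self, mapping: Dict[str, Tuple[str, Callable[[float], float]]]) -> None:
        self.mapping = mapping  # export name -> (import name, converter)

    def run(self, export_state: State, import_state: State) -> None:
        for src, (dst, convert) in self.mapping.items():
            if src in export_state.fields:
                import_state.fields[dst] = convert(export_state.fields[src])


atm, ocn = Atmosphere(), Ocean()
ocn_to_atm = Coupler({"sst_C": ("sst_K", lambda c: c + 273.15)})
atm_to_ocn = Coupler({"wind_stress_Pa": ("wind_stress_Pa", lambda x: x)})

for _ in range(3):  # each component is both a producer and a consumer
    ocn.run()
    ocn_to_atm.run(ocn.export_state, atm.import_state)
    atm.run()
    atm_to_ocn.run(atm.export_state, ocn.import_state)

print(round(ocn.sst_c, 4), round(atm.export_state.fields["wind_stress_Pa"], 7))
```

Note that `Atmosphere` and `Ocean` never refer to each other; only the couplers know which fields flow where.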

Both Gridded and Coupler Components are implemented in the Fortran interface as derived types with associated modules. ESMF itself does not currently contain fully prefabricated Gridded or Coupler Components; the user must connect the wrappers in ESMF to scientific content. Tools available in ESMF include methods for time advancement, data redistribution, on-line and off-line calculation of interpolation weights, application of interpolation weights via a sparse matrix multiply, and other common modeling functions.
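
Applying interpolation weights as a sparse matrix multiply can be illustrated with a small, self-contained Python sketch. The weight generator below is a hypothetical 1-D, first-order conservative scheme chosen for brevity (ESMF's own weight generation works on 2-D and 3-D grids and meshes and is far more general). The weights are stored as sparse (destination, source, weight) triplets and applied as `dst = W @ src`; because the weights depend only on the two grids, they can be computed once and reused at every coupling step.

```python
from typing import List, Sequence, Tuple

Triplet = Tuple[int, int, float]  # (destination cell, source cell, weight)


def conservative_weights_1d(src_edges: Sequence[float],
                            dst_edges: Sequence[float]) -> List[Triplet]:
    """First-order conservative weights between two 1-D grids given cell edges.

    Weight = (overlap length) / (destination cell length), so each
    destination value is the overlap-weighted mean of the source values.
    """
    weights: List[Triplet] = []
    for i in range(len(dst_edges) - 1):
        d0, d1 = dst_edges[i], dst_edges[i + 1]
        for j in range(len(src_edges) - 1):
            s0, s1 = src_edges[j], src_edges[j + 1]
            overlap = min(d1, s1) - max(d0, s0)
            if overlap > 0.0:  # only overlapping cells give nonzero entries
                weights.append((i, j, overlap / (d1 - d0)))
    return weights


def apply_weights(weights: Sequence[Triplet], src: Sequence[float],
                  n_dst: int) -> List[float]:
    """Sparse matrix-vector product dst = W @ src with W in triplet form."""
    dst = [0.0] * n_dst
    for i, j, w in weights:
        dst[i] += w * src[j]
    return dst


src_edges = [0.0, 1.0, 2.0, 3.0]  # three source cells of width 1
dst_edges = [0.0, 1.5, 3.0]       # two destination cells of width 1.5
W = conservative_weights_1d(src_edges, dst_edges)
src = [1.0, 4.0, 7.0]
dst = apply_weights(W, src, n_dst=len(dst_edges) - 1)

# Conservation check: the integral over the domain is unchanged.
src_total = sum(v * (src_edges[k + 1] - src_edges[k]) for k, v in enumerate(src))
dst_total = sum(v * (dst_edges[k + 1] - dst_edges[k]) for k, v in enumerate(dst))
print(dst, src_total, dst_total)
```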

Coupler Component arrangements can vary for ESMF applications. Multiple couplers may be included in a single modeling application. This is a natural strategy when the application is structured as a hierarchy of components. Each level in the hierarchy usually has its own set of Coupler Components. Figure 1.4 shows the arrangement of components in the GEOS-5 model.

Design goals for ESMF applications include the ability to use the same Gridded Component in multiple contexts, to swap different implementations of a Gridded Component into an application, and to assemble and extend coupled systems easily.

A design pattern that addresses these goals is the mediator, in which one object encapsulates how a set of other objects interact (Gamma et al. 1995[8]). The mediator serves as an intermediary and keeps objects from referring to each other explicitly. ESMF Coupler Components are intended to follow this pattern, which is important because it enables the Gridded Components in an application to be deployed in multiple contexts without changes to their source code. The mediator pattern promotes a simplified view of inter-component interactions: the mediator encapsulates all the complexities of data transformation between components. However, this can lead to excessive complexity within the mediator itself. ESMF has addressed this issue by encouraging users to create multiple, simpler Coupler Components and embed them in a predictable fashion in a hierarchical architecture, instead of relying on a single central coupler. This approach is useful for modeling complex, interdependent Earth system processes, since the interpretation of results in a many-component application may rely on a scientist's ability to grasp the flow of interactions system-wide.
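
The following hypothetical Python sketch (not ESMF code) shows why the mediator arrangement makes it possible to swap implementations. The `atmosphere` function never names the ocean; each small, one-directional `Coupler` is the only object that knows both components' field names. Replacing the ocean model with an alternative that exports a differently named field changes one coupler's rename table, not the atmosphere's source. All component names, field names and numbers are invented for illustration.

```python
from typing import Callable, Dict, List

Fields = Dict[str, float]


class Gridded:
    """Minimal component: a step function from import fields to export fields."""

    def __init__(self, step: Callable[[Fields], Fields]) -> None:
        self.step = step
        self.imports: Fields = {}
        self.exports: Fields = {}

    def run(self) -> None:
        self.exports = self.step(self.imports)


class Coupler:
    """One-directional mediator: the only object that knows both vocabularies."""

    def __init__(self, src: Gridded, dst: Gridded, rename: Dict[str, str]) -> None:
        self.src, self.dst, self.rename = src, dst, rename

    def run(self) -> None:
        for src_name, dst_name in self.rename.items():
            if src_name in self.src.exports:
                self.dst.imports[dst_name] = self.src.exports[src_name]


def atmosphere(imports: Fields) -> Fields:  # identical in both configurations
    return {"tau_x": 0.01 * imports.get("sst_C", 0.0)}


def slab_ocean(imports: Fields) -> Fields:
    return {"sst": 15.0 - 5.0 * imports.get("tau", 0.0)}


def fixed_ocean(imports: Fields) -> Fields:  # ignores forcing, other field name
    return {"SST_C": 20.0}


def build(ocean_step: Callable[[Fields], Fields], ocean_sst_name: str) -> List:
    atm, ocn = Gridded(atmosphere), Gridded(ocean_step)
    # Two small couplers instead of one central coupler.
    to_atm = Coupler(ocn, atm, {ocean_sst_name: "sst_C"})
    to_ocn = Coupler(atm, ocn, {"tau_x": "tau"})
    return [ocn, to_atm, atm, to_ocn]  # run sequence for one coupling step


def integrate(schedule: List, n_steps: int) -> Fields:
    for _ in range(n_steps):
        for item in schedule:
            item.run()
    return schedule[2].exports  # the atmosphere's exports


slab = integrate(build(slab_ocean, "sst"), n_steps=2)
fixed = integrate(build(fixed_ocean, "SST_C"), n_steps=2)
print(slab, fixed)
```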

Computational environment and throughput requirements motivate another set of design strategies. ESMF component wrappers must not impose significant overhead (generally less than 5%), and must operate efficiently on a wide range of computer architectures, from desktop computers to petascale supercomputers. To satisfy these requirements, the ESMF software relies on memory-efficient and highly scalable algorithms (e.g., Devine et al. 2002[9]). Performance analyses have shown ESMF running efficiently on tens of thousands of processors.

How the components in a modeling application are mapped to computing resources can have a significant impact on performance. Strategies vary for different computer architectures, and ESMF is flexible enough to support multiple approaches. ESMF components can run sequentially (one following the other, on the same computing resources), concurrently (at the same time, on different computing resources), or in combinations of these execution modes. Most ESMF applications run as a single executable, meaning that all components are combined into one program. Starting at a top-level driver, each level of an ESMF application controls the partitioning of its resources and the sequencing of the components of the next lower level.
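
As a rough sketch of the sequential and concurrent layouts just described, the hypothetical Python helper below maps components onto processor ranks (ESMF calls these PETs, persistent execution threads). In sequential mode every component shares all ranks and the components take turns; in concurrent mode the ranks are split into disjoint blocks, here in proportion to an assumed relative cost per component, with at least one rank each. This is not an ESMF function; in a real application each parent component makes this decision for the components below it.

```python
from typing import Dict, List


def assign_pets(n_pets: int, costs: Dict[str, int], mode: str) -> Dict[str, List[int]]:
    """Hypothetical layout helper: map each component to a list of ranks."""
    if mode == "sequential":
        # All components run on the same ranks, one after another.
        return {name: list(range(n_pets)) for name in costs}
    if mode != "concurrent":
        raise ValueError(f"unknown mode {mode!r}")
    if n_pets < len(costs):
        raise ValueError("concurrent mode needs at least one rank per component")

    total = sum(costs.values())
    names = list(costs)
    layout: Dict[str, List[int]] = {}
    start = 0
    for k, name in enumerate(names):
        remaining = len(names) - k - 1  # components still waiting for ranks
        if remaining == 0:
            count = n_pets - start  # the last component takes what is left
        else:
            count = max(1, n_pets * costs[name] // total)
            count = min(count, n_pets - start - remaining)  # leave one each
        layout[name] = list(range(start, start + count))
        start += count
    return layout


print(assign_pets(8, {"atm": 3, "ocn": 1}, "sequential"))
print(assign_pets(8, {"atm": 3, "ocn": 1}, "concurrent"))
```

Mixed layouts, as the paragraph notes, arise when a parent runs its children concurrently and each child runs its own children sequentially on the ranks it was given.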

It is not necessary to rewrite the internals of model codes to implement coupling using ESMF. Model code attaches to ESMF standard component interfaces via a user-written translation layer that connects native data structures to ESMF data structures. The steps in adopting ESMF are summarized by the acronym PARSE:

- Prepare user code. Split user code into initialize, run, and finalize methods and decide on components, coupling fields, and control flow.

- Adapt data structures. Wrap native model data structures in ESMF data structures to conform to ESMF interfaces.

- Register user methods. Attach user code initialize, run, and finalize methods to ESMF components through registration calls.

- Schedule, synchronize, and send data between components. Write couplers using ESMF redistribution, sparse matrix multiply, regridding, and/or user-specified transformations.

- Execute the application. Run components using an ESMF driver.
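
The five PARSE steps can be mirrored in a few lines of Python. This is a hypothetical analogy rather than ESMF's API (in ESMF's Fortran interface, registration goes through its SetServices and entry-point routines): a toy "bucket" model is split into initialize, run and finalize functions (Prepare), its data live in a dictionary standing in for an ESMF State (Adapt), the functions are attached to a component by phase name (Register), a simple assignment stands in for a coupler delivering forcing (Schedule and send), and a driver loop executes the phases (Execute). The bucket physics is invented for illustration.

```python
from typing import Callable, Dict

State = Dict[str, float]
Clock = Dict[str, int]


# Prepare: native model code split into three phases (toy bucket model).
def bucket_initialize(state: State, clock: Clock) -> None:
    state["storage_mm"] = 0.0


def bucket_run(state: State, clock: Clock) -> None:
    # Add this step's rain, then drain 10% of the storage (illustrative only).
    state["storage_mm"] = 0.9 * (state["storage_mm"] + state.get("rain_mm", 0.0))


def bucket_finalize(state: State, clock: Clock) -> None:
    state["final_storage_mm"] = state["storage_mm"]


class Component:
    """Hypothetical component shell; user methods are attached by registration."""
    PHASES = ("initialize", "run", "finalize")

    def __init__(self, name: str) -> None:
        self.name = name
        self.state: State = {}  # Adapt: native data held in a State-like dict
        self.methods: Dict[str, Callable[[State, Clock], None]] = {}

    def register(self, phase: str, method: Callable[[State, Clock], None]) -> None:
        if phase not in self.PHASES:
            raise ValueError(f"unknown phase {phase!r}")
        self.methods[phase] = method

    def execute(self, phase: str, clock: Clock) -> None:
        self.methods[phase](self.state, clock)


def driver(comp: Component, n_steps: int, rain_mm: float) -> State:
    """Execute: initialize once, run once per step, finalize once."""
    clock: Clock = {"step": 0}
    comp.execute("initialize", clock)
    for step in range(n_steps):
        clock["step"] = step
        comp.state["rain_mm"] = rain_mm  # Schedule/send: stands in for a coupler
        comp.execute("run", clock)
    comp.execute("finalize", clock)
    return comp.state


bucket = Component("bucket")  # Register
bucket.register("initialize", bucket_initialize)
bucket.register("run", bucket_run)
bucket.register("finalize", bucket_finalize)
print(driver(bucket, n_steps=2, rain_mm=10.0))
```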

Immediate ESMF plans include enabling the framework to generate conservative interpolation weights for a wide variety of grids, and improving handling of masking and fractional areas. Implementation of a high-performance I/O package is also a near-term priority. Looking to the longer term, the ESMF team is exploring the use of metadata to broker and automate coupling services, and to enable self-describing model runs.

Community Surface Dynamics Modeling System

The Community Surface Dynamics Modeling System (CSDMS) develops, supports, and disseminates integrated software modules that predict the movement of fluids (wind, water, and ice) and the flux (production, erosion, transport, and deposition) of sediment and solutes in landscapes, seascapes and their sedimentary basins. CSDMS is an integrated community of experts that promotes the quantitative modeling of earth-surface processes. The CSDMS community comprises more than 350 members from 76 U.S. academic institutions, 17 U.S. federal labs and agencies, 63 non-U.S. institutes from 17 countries, and an industrial consortium of 11 companies. CSDMS operates under a cooperative agreement with the National Science Foundation (NSF), with additional financial support from industry and agencies. CSDMS serves this diverse community by promoting the sharing and re-use of high-quality, open-source modeling software. The CSDMS Model Repository comprises a searchable inventory of more than 100 contributed models (more than 3 million lines of code). CSDMS employs state-of-the-art software tools that make it possible to convert stand-alone models into flexible, "plug-and-play" components that can be assembled into larger applications. The CSDMS project also serves as a migration pathway for surface dynamics modelers towards high-performance computing (HPC). CSDMS offers much more than a national Model Repository and model-coupling framework (e.g. the CSDMS Data Repository and the CSDMS Education & Knowledge Transfer Repository), but this chapter focuses on those two elements only.

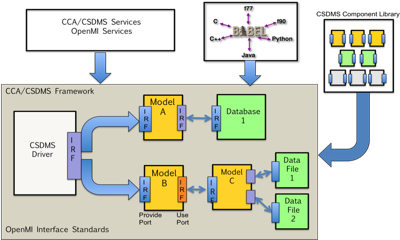

The CSDMS Framework is predicated on the tools of the Common Component Architecture (CCA) that are used to convert member-contributed code into linkable components (Hutton et al., 2010[10]). CCA is a component architecture standard adopted by the U.S. Department of Energy and its national labs and many academic computational centers to allow software components to be combined and integrated for enhanced functionality on high-performance computing (HPC) systems (Kumfert et al. 2006[11]). CCA fulfills an important need of scientific, high-performance, open-source computing (Armstrong et al. 1999[3]). Software tools developed in support of the CCA standard are often referred to as the "CCA tool chain", and include those adopted by CSDMS: Babel, Bocca and Ccaffeine.

Babel is an open-source language interoperability tool (and compiler) that automatically generates the "glue code" needed for components written in different computer languages to communicate (Dahlgren et al. 2007[12]). It currently supports C, C++, Fortran (77, 90, 95 and 2003), Java and Python. Almost all of the surface dynamics models in the contributed CSDMS Model Repository are written in one of these languages. Babel enables passing of variables with data types that may not normally be supported by the target language (e.g. objects, complex numbers). To create the glue code that lets two components written in different programming languages communicate (that is, pass data between them), Babel only needs to know about the interfaces of the two components; it does not need any implementation details. Babel can ingest a description of an interface in either of two fairly "language neutral" forms: XML (eXtensible Markup Language) or SIDL (Scientific Interface Definition Language), which provides a concise description of a scientific software component interface. This description includes the names and data types of all arguments and the return values for each member function. SIDL has a complete set of fundamental data types to support scientific computing, including booleans, double precision complex numbers, enumerations, strings, objects, and dynamic multi-dimensional arrays. SIDL syntax is very similar to Java.
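
Babel's key idea, that glue code can be produced from an interface description alone, can be illustrated with an in-language Python analogy. Everything here is hypothetical: `Method`, `make_glue` and the type names (loosely modeled on SIDL's, with `dcomplex` for double precision complex) are invented, and Babel itself generates compiled glue for each target language rather than Python proxies. The proxy below is built only from method names and declared argument and return types; it marshals each argument to its declared type and never inspects how the implementation works.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple

# Marshalling rules per declared type (names loosely modeled on SIDL).
CONVERTERS: Dict[str, Callable[[Any], Any]] = {
    "bool": bool,
    "int": int,
    "double": float,
    "dcomplex": complex,
    "string": str,
    "array<double>": lambda xs: [float(x) for x in xs],
}


@dataclass
class Method:
    name: str
    args: List[Tuple[str, str]]  # (argument name, declared type)
    returns: str


def make_glue(interface: List[Method], impl: Any) -> Any:
    """Generate a proxy from the interface description only."""

    class Proxy:
        pass

    proxy = Proxy()
    for method in interface:
        def stub(*values: Any, _m: Method = method) -> Any:
            if len(values) != len(_m.args):
                raise TypeError(f"{_m.name} expects {len(_m.args)} args, got {len(values)}")
            converted = [CONVERTERS[t](v) for (_, t), v in zip(_m.args, values)]
            return CONVERTERS[_m.returns](getattr(impl, _m.name)(*converted))
        setattr(proxy, method.name, stub)
    return proxy


class Diffusion1D:
    """'Foreign' implementation: one explicit diffusion step, fixed end values."""

    def step(self, u, kappa):
        inner = [u[i] + kappa * (u[i - 1] - 2 * u[i] + u[i + 1]) for i in range(1, len(u) - 1)]
        return [u[0]] + inner + [u[-1]]


interface = [Method("step", [("u", "array<double>"), ("kappa", "double")], "array<double>")]
diffusion = make_glue(interface, Diffusion1D())
print(diffusion.step([0, 1, 0], 0.25))  # integer inputs are marshalled to doubles
```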

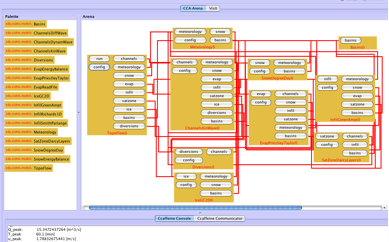

Bocca helps create, edit and manage CCA components and ports associated with a particular project, and is a key tool that CSDMS software engineers use when converting user-contributed code into plug-and-play components for use by CSDMS members. Bocca is a development environment tool that allows rapid component prototyping while maintaining robust software-engineering practices suitable to HPC environments. It operates in a language-agnostic way by automatically invoking the lower-level Babel tool. Bocca can be used interactively at a Unix command prompt or within shell scripts. Once CCA-compliant components and ports are prepared using Bocca, CSDMS members can then assemble models into applications with the CSDMS Modeling Framework that employs Ccaffeine (Fig. 1.5).

Ccaffeine is one of the most widely used CCA-compliant frameworks. Ccaffeine has a simple set of scripting commands that are used to instantiate, connect and disconnect CCA-compliant components. There are at least three ways to use Ccaffeine: (1) at an interactive command prompt, (2) with a "Ccaffeine script", or (3) with a GUI that creates a Ccaffeine script. The GUI is especially helpful for new users and for demonstrations and simple prototypes, while scripting is often faster for programmers and gives them greater flexibility. The GUI allows users to select components from a palette and drag them into an arena. Components in the arena can be connected to one another by clicking on buttons that represent their ports (Fig. 1.6). Components with a "config" button allow their parameters to be changed in a tabbed dialog with access to HTML help pages. Once components are connected, clicking the "run" button on the "driver" component starts the application. The CSDMS Modeling Tool offers significant extensions and improvements to the basic Ccaffeine GUI, including access to VisIt, a powerful, open-source (DOE) visualization package specifically designed for HPC use with multiple processors.
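
The instantiate/connect/disconnect model, with components that provide and use ports, can be sketched in Python. This is a hypothetical toy, not Ccaffeine's script syntax or API: `Framework`, the component classes, the port names and the runoff coefficient are all invented. Each component lists the ports it provides (here, plain callables) and receives the ports it uses only when the framework connects them, so no component refers to another directly; `go` plays the role of the "run" button on the driver component.

```python
from typing import Callable, Dict, Type


class Component:
    """Base class: ports this component provides, and ports it uses."""

    def __init__(self) -> None:
        self.provides: Dict[str, Callable[[], float]] = {}
        self.uses: Dict[str, Callable[[], float]] = {}


class Rainfall(Component):
    def __init__(self) -> None:
        super().__init__()
        self.provides["rain_rate"] = lambda: 10.0  # mm/h, constant for the demo


class Runoff(Component):
    def __init__(self, coefficient: float = 0.3) -> None:
        super().__init__()
        # Output depends on whatever is connected to the "rain" uses-port.
        self.provides["runoff_rate"] = lambda: coefficient * self.uses["rain"]()


class Driver(Component):
    def __init__(self) -> None:
        super().__init__()
        self.provides["go"] = lambda: self.uses["model"]()


class Framework:
    """Toy framework: holds instances and wires uses-ports to provides-ports."""

    def __init__(self, palette: Dict[str, Type[Component]]) -> None:
        self.palette = palette
        self.instances: Dict[str, Component] = {}

    def instantiate(self, class_name: str, instance_name: str) -> None:
        self.instances[instance_name] = self.palette[class_name]()

    def connect(self, user: str, uses_port: str, provider: str, provides_port: str) -> None:
        port = self.instances[provider].provides[provides_port]
        self.instances[user].uses[uses_port] = port

    def disconnect(self, user: str, uses_port: str) -> None:
        del self.instances[user].uses[uses_port]

    def go(self, driver_name: str) -> float:
        return self.instances[driver_name].provides["go"]()


fw = Framework({"Rainfall": Rainfall, "Runoff": Runoff, "Driver": Driver})
fw.instantiate("Rainfall", "rain")
fw.instantiate("Runoff", "runoff")
fw.instantiate("Driver", "driver")
fw.connect("runoff", "rain", "rain", "rain_rate")
fw.connect("driver", "model", "runoff", "runoff_rate")
print(fw.go("driver"))
```

Swapping `Rainfall` for another rain provider would only change the `instantiate` and `connect` lines, which is the flexibility the scripting and GUI approaches expose.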

CSDMS adopts parts of the OpenMI interface standard (OpenMI version 2.0, to be released soon, is more closely aligned with the needs of CSDMS than version 1.4). CSDMS components have the member functions required for an OpenMI-style "IRF interface" (Initialize, Run, Finalize) as well as the member functions required for use in a CCA-compliant framework such as Ccaffeine. OpenMI provides an open-source interface standard for environmental and hydrologic modeling (Gregersen et al. 2007[13]). An IRF interface lies at the core of OpenMI, with additional member functions for dealing with various differences between models (e.g. units, time stepping and dimensionality). While OpenMI is primarily an interface standard that transcends any particular language or operating system, its developers have also created a software development kit (SDK) that contains many tools and utilities that make it easier to implement the OpenMI interface. Both the interface and the SDK are available in C# and Java. The C# version is intended for use in Microsoft's .NET framework on a PC running Windows. CSDMS has incorporated key parts of the OpenMI interface (and the Java version of the SDK) into its component interface.
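
A minimal Python sketch of IRF-style components follows. The class names, the `get_value` method, the linear-reservoir physics and the unit-converting `Link` are all hypothetical, and OpenMI's real interfaces (in C# and Java) differ in detail. The sketch does echo two ideas from the text: every component exposes initialize, run (one time step) and finalize, and the coupling layer handles differences between models, here a mismatch in time step (1 s versus 3 s) and in units (m^3/s versus L/s). The consumer requests values at its own time and the provider advances as many of its own steps as needed, which resembles OpenMI's request-driven GetValues exchange.

```python
from typing import List, Tuple


class LinearReservoir:
    """IRF-style provider: dS/dt = inflow - k*S (explicit Euler), outflow Q = k*S."""

    def __init__(self, inflow: float = 2.0, k: float = 0.5, dt: float = 1.0) -> None:
        self.inflow, self.k, self.dt = inflow, k, dt  # m^3/s, 1/s, s

    def initialize(self) -> None:
        self.time, self.storage = 0.0, 0.0

    def run(self) -> None:  # advance exactly one time step
        self.storage += self.dt * (self.inflow - self.k * self.storage)
        self.time += self.dt

    def finalize(self) -> None:
        pass  # nothing to release in this toy

    def get_value(self, name: str, time: float) -> float:
        if name != "outflow_m3_per_s":
            raise KeyError(name)
        while self.time < time:  # advance until the requested time is reached
            self.run()
        return self.k * self.storage


class Link:
    """Connects a consumer to a provider quantity and converts units."""

    def __init__(self, provider: LinearReservoir, quantity: str, factor: float) -> None:
        self.provider, self.quantity, self.factor = provider, quantity, factor

    def get(self, time: float) -> float:
        return self.factor * self.provider.get_value(self.quantity, time)


class Gauge:
    """IRF-style consumer with a longer time step; records discharge in L/s."""

    def __init__(self, link: Link, dt: float = 3.0) -> None:
        self.link, self.dt = link, dt

    def initialize(self) -> None:
        self.time = 0.0
        self.record: List[Tuple[float, float]] = []

    def run(self) -> None:
        self.time += self.dt
        self.record.append((self.time, self.link.get(self.time)))

    def finalize(self) -> None:
        pass


reservoir = LinearReservoir()
gauge = Gauge(Link(reservoir, "outflow_m3_per_s", factor=1000.0))  # m^3/s -> L/s
for component in (reservoir, gauge):
    component.initialize()
for _ in range(2):
    gauge.run()
for component in (gauge, reservoir):
    component.finalize()
print(gauge.record, reservoir.time)
```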

In summary, the suite of services, architecture and framework offered by a mixture of OpenMI and CCA tools and standards forms the basis of the CSDMS Modeling Framework (Fig. 1.5). By downloading the CSDMS GUI, users are able to (1) rapidly build new applications from the set of available components, (2) run their new applications on the CSDMS supercomputer, (3) visualize their model output, and (4) download their model runs to their personal computers and servers. The CSDMS GUI offers: (1) platform independence (Linux, OS X and Windows), (2) parallel computation (via the MPI standard), (3) language interoperability, (4) support for legacy (non-protocol) code and structured code (procedural and object-oriented), (5) interoperability with other coupling frameworks, (6) both structured and unstructured grids, and (7) a large suite of open-source tools.

Major Challenges

Voinov et al.[14] (in press) recently outlined the technical challenges facing community-modeling efforts that address earth-surface dynamics: 1) fundamental algorithms to describe processes; 2) software to implement these algorithms; 3) software for manipulating, analyzing, and assimilating observations; 4) standards for data and model interfaces; 5) software to facilitate community collaborations; 6) standard metadata and ontologies to describe models and data; and 7) improvements in hardware. Voinov et al.[14] (in press) wisely noted that the most difficult challenges may be social or institutional. Among their many recommendations, we emphasize the following:

- As a requirement for receiving federal funds, code is to be open source, and accessible through model repositories.

- The production of well-documented, peer-reviewed code is worthy of merit at all levels.

- Develop effective means for the peer review, publication, and citation of code, standards, and documentation.

- Recognize at all levels the contributions to community modeling and cyber-infrastructure efforts.

- Use/adapt existing tools first before duplicating these efforts; adopt existing standards for data, model input and output, and interfaces.

- Employ software development practices that favor transparency, portability, and reusability, and include procedures for version control, bug tracking, regression testing, and release maintenance.

References

- [1] Wilson, G. and Lumsdaine, A. (2008) Software engineering and computational science. Computing in Science and Engineering 11: 12-13.

- [2] McMail, T.C. (2008) Next-generation research and breakthrough innovation: indicators from US academic research. Computing in Science and Engineering 11: 76-83.

- [3] Armstrong, R., Gannon, D., Geist, A., et al. (1999) Toward a common component architecture for high-performance scientific computing. Proceedings of the 8th Intl. Symposium on High Performance Distributed Computing, 115-124.

- [4] Maidment, D. R. (2008) Bringing Water Data Together, J. Water Resour. Plng. and Mgmt., 134(2), 95, doi:10.1061/(ASCE)0733-9496(2008)134:2(95).

- [5] Erl, T. (2005) Service-Oriented Architecture: Concepts, Technology, and Design, Prentice Hall PTR, Upper Saddle River, NJ, USA.

- [6] Tarboton, D. G., J. S. Horsburgh, D. R. Maidment, et al. (2009) Development of a Community Hydrologic Information System, in 18th World IMACS / MODSIM Congress, Cairns, Australia.

- [7] Horsburgh, J. S., D. G. Tarboton, D. R. Maidment, and I. Zaslavsky (2008) A relational model for environmental and water resources data, Water Resour. Res., 44(5), doi:10.1029/2007WR006392.

- [8] Gamma, E., Helm, R., Johnson, R., and Vlissides, J. (1995) Design Patterns: Elements of Reusable Object-Oriented Software. Addison-Wesley.

- [9] Devine, K., E. Boman, R. Heaphy, B. Hendrickson, and C. Vaughan (2002) "Zoltan: Data management services for parallel dynamic applications," Computing in Science and Engineering, vol. 4, no. 2.

- [10] Hutton, E.W.H., J.P.M. Syvitski and S.D. Peckham (2010) Producing CSDMS-compliant Morphodynamic Code to Share with the RCEM Community. In: Vionnet et al. (eds) River, Coastal and Estuarine Morphodynamics RCEM 2009, Taylor & Francis Group, London, ISBN 978-0-415-55426-CRC Press, p. 959-962.

- [11] Kumfert, G., D.E. Bernholdt, T. Epperly, et al. (2006) How the Common Component Architecture advances computational science, Journal of Physics: Conference Series, 46, 479-493.

- [12] Dahlgren, T., T. Epperly, G. Kumfert and J. Leek (2007) Babel User's Guide, March 23, 2007 edition, Center for Applied Scientific Computing, U.S. Dept. of Energy and University of California Lawrence Livermore National Laboratory, 269 pp.

- [13] Gregersen, J.B., Gijsbers, P.J.A., and Westen, S.J.P. (2007) OpenMI: Open Modeling Interface. Journal of Hydroinformatics, 9(3), 175-191.

- [14] Voinov, A., C. DeLuca, R. Hood, S. Peckham, C. Sherwood, J.P.M. Syvitski (in press) Community Modeling in Earth Sciences. EOS Transactions of the AGU.