
CSDMS Handbook of Concepts and Protocols: A Guide for Code Contributors

by Scott Peckham

Introduction

The Community Surface Dynamics Modeling System (CSDMS) is a community software project that is funded through a cooperative agreement with the National Science Foundation (NSF). A major goal of this project is to serve a diverse community of surface dynamics modelers by providing resources to promote the sharing and re-use of high-quality, open-source modeling software. In support of this goal, the CSDMS Integration Office maintains a searchable inventory of contributed models and employs state-of-the-art software tools that make it possible to convert stand-alone models into flexible, "plug-and-play" components that can be assembled into larger applications. The CSDMS project also has a mandate from the NSF to provide a migration pathway for surface dynamics modelers towards high-performance computing (HPC) and will soon be acquiring a supercomputer for use by its members.

The purpose of this document is to provide background and guidance to modelers who wish to understand and get involved with the CSDMS project, usually by contributing source code. It is expected that a code contributor will be familiar with some basic computer science concepts and at least one programming language. However, this document does not assume that the reader is familiar with object-oriented or component-based programming so these key concepts are explained in some detail. Important concepts, terminology and tools are explained at an introductory level to help readers get up and running quickly. For more detail on any given topic, readers are encouraged to utilize online resources like Wikipedia and the numerous links that have been embedded in this document. In addition to explaining key concepts, this handbook also contains a number of step-by-step "how-to" sections and examples. While these are provided for completeness, it is expected that readers will skip over any sections that are not of immediate interest. The References section at the end provides a list of papers and books that CSDMS staff has found to be particularly helpful or well-written and not all of them are cited in the text. Those about to contribute a model to the CSDMS project may wish to jump directly to the section called Requirements for Code Contributors.

The main problem that CSDMS aims to address is that while the surface dynamics modeling community has lots of models, it is difficult to couple or reconfigure them to solve new problems. This is because they are an inhomogeneous set in the sense that:

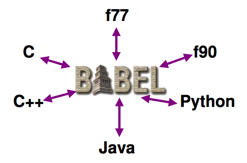

- They are written in many different languages, some object-oriented, some procedural, some compiled, some interpreted, some proprietary, some open-source, etc.

- These languages don’t all offer the same data types and other features, so special tools are required to create “glue code” necessary to make function calls across the language barrier.

- They weren’t designed to “talk” to each other and don’t follow any particular set of conventions.

- We can’t rewrite all of them (rewriting/debugging is too costly) and this takes “ownership” away from model developers.

- Some use explicit numerical schemes and others use implicit ones.

Some of the "functional specs" or "technical goals" of the CSDMS project are therefore:

- Support for multiple operating systems (especially Linux, Mac OS X and Windows)

- Support for parallel computation (multi-processor, via the MPI standard)

- Language interoperability to support code contributions written in C & Fortran as well as more modern object-oriented languages (e.g. Java, C++, Python) (CCA is language neutral)

- Support for both legacy code (non-protocol) and more structured code submissions (“procedural” and object-oriented)

- Should be interoperable with other coupling frameworks

- Support for both structured and unstructured grids

- Platform-independent GUIs and graphics where useful

- Large collection of open-source tools

Why is it Often Difficult to Link Models?

Linking together models that were not specifically designed from the outset to be linkable is often surprisingly difficult and a brute force approach to the problem often requires a large time investment. The main reason for this is that there are a lot of ways in which two models may differ. The following list of possible differences helps to illustrate this point.

- Written in different languages (conversion is time-consuming and error-prone).

- The person doing the linking may not be the author of either model and the code is often not well-documented or easy to understand.

- Models may have different dimensionality (1D, 2D or 3D)

- Models may use different types of grids (rectangles, triangles, polygons)

- Each model has its own time loop or "clock".

- The numerical scheme may be either explicit or implicit.

- The type of coupling required poses its own challenges. Some common types of model coupling are:

  - Layered = A vertical stack of grids that may represent:

    - different domains (e.g. atmosphere-ocean, atmosphere-surface-subsurface, saturated-unsaturated),

    - subdivision of a domain (e.g. stratified flow, stratigraphy),

    - different processes (e.g. precipitation, snowmelt, infiltration, seepage, evapotranspiration).

    - A good example is a distributed hydrologic model.

  - Nested = Usually a high-resolution (and maybe 3D) model that is embedded within (and may be driven by) a lower-resolution model (e.g. regional winds/waves driving coastal currents, or a 3D channel flow model within a landscape model).

  - Boundary-coupled = Model coupling across a natural (possibly moving) boundary, such as a coastline. Usually fluxes must be shared across the boundary.


Requirements for Code Contributors

The CSDMS project is happy to accept open-source code contributions from the modeling community in any programming language and in whatever form it happens to be in. One of our key goals is to create an inventory of what models are available. We use an online questionnaire to collect basic information about different open-source models and we make this information available to anyone who visits our website at csdms.colorado.edu. We can also serve as a repository for model source code, but in many cases our website instead redirects visitors to another website which may be a website maintained by the model developer or a source code repository like SourceForge, JavaForge or Google Code. Online source code repositories (or project hosting sites) like these are free and provide developers with a number of useful tools for managing collaborative software development projects. CSDMS does not aim to compete with the services that these repositories provide (e.g. version control, issue tracking, wikis, online chat and web hosting).

Another key goal of the CSDMS project is to create a collection of open-source, earth-science modeling components that are designed so that they are relatively easy to reuse in new modeling projects. CSDMS has studied this problem and has examined a number of different technologies for addressing it. We have learned that there are certain fundamental design principles that are common to all of these model-coupling technologies. That is, there is a certain minimum amount of code refactoring that is necessary in order for a model to be usable as a "plug-and-play" component. This is encouraging, because CSDMS does not want to recommend any particular course of action or protocol to our code contributors unless there are sound reasons for doing so (such as broad applicability). The recommendations that we give here may be viewed as a distillation of what is common among the various approaches to model coupling.

1. Code must be in a Babel-supported language

The programming languages that are currently supported by Babel are: C, Fortran (77, 95, 2003), C++, Java and Python. We realize that there are also a significant number of models that are written in proprietary, array-based languages like MATLAB and IDL (Interactive Data Language). CSDMS has identified an alpha version of an open-source software tool called I2PY that converts IDL to Python and has greatly extended it. This tool maps array-based IDL functions and procedures to similar ones (with similar performance) that are available in a Python module called NumPy (Numerical Python) and maps IDL plotting commands to similar ones in the Matplotlib module. I2PY is written in Python and is built upon standard Unix-based parsing tools such as yacc and lex. CSDMS hopes to leverage the I2PY conversion tool into a new tool, perhaps called M2PY, which can similarly convert MATLAB code to Python.

Note that, in general, converting source code from one language to another is a tedious, time-consuming and error-prone process. It is not something that can be fully automated, even when the two languages are similar and conversion tools are used. For two languages that are quite different, the conversion process is even more complicated. CSDMS simply does not have the resources to do this. More importantly, however, converting source code to a new language leads to a situation where it is difficult for CSDMS to incorporate bug fixes and ongoing improvements to the model by its developer. Ideally, primary responsibility for a model (i.e. "problem ownership") should remain with the developer or team that created it, and they should be encouraged to use whatever language they prefer and are most productive in. However, by requiring relatively simple and one-time changes to how the source code is modularized, CSDMS gains the ability to apply an automated wrapping process to the latest stable version of the model and begin using it as a component.

2. Code must compile with a CSDMS-supported, open-source compiler

Supporting more than one compiler for each of the Babel-supported languages would require more resources than CSDMS has available with regard to testing and technical support. However, it is usually fairly straightforward for code developers to modify their own code so that it will compile with an open-source compiler like gcc. CSDMS intends to provide online tips and other resources to assist developers with this process.

3. Refactor source code to have an "IRF interface"

One "universal truth" of component-based programming is that in order for a model to be used as a component in another model, its interface must allow complete control to be handed to an external caller. Most earth-science models have to be initialized in some manner and then use time stepping or another form of stepping in order to compute a result. While time-stepping models are the most familiar, many other problems such as root-finding and relaxation methods employ some type of iteration or stepping. For maximum plug-and-play flexibility, it is necessary to make the actions that take place during a single step directly accessible to a caller.

To see why this is so, consider two time-stepping models. Suppose that Model A melts snow, routes the runoff to a lake, and increases the depth of the lake while Model B computes lake-level lowering due to evaporative loss. Each model initializes the lake depth, has its own time loop and changes the lake depth. If each of these models is written in the traditional manner, then combining them into a single model means that whatever happens inside the time loop of Model A must be pasted into Model B's time loop or vice versa. There can only be one time loop. This illustrates, in its simplest form, a very common problem that is encountered when linking models.

Now imagine that we restructure the source code of both models slightly so that they each have their own Initialize(), Run_Step() and Finalize() subroutines. The Initialize() routine contains all of the code that came before the time loop in the original model, the Run_Step() routine contains the code that was inside the time loop (and returns all updated variables) and the Finalize() routine contains the code that came after the time loop. Now suppose that we write one additional routine, perhaps called Run_Model() or Main(), which simply calls Initialize(), starts a time loop which calls Run_Step() and then calls Finalize(). Calling Run_Model() reproduces the functionality of the original model, so we have made a fairly simple, one-time change to our two models and retained the ability to use them in "stand-alone mode." Future enhancements to the model simply insert new code into this new set of four subroutines.

More importantly, this simple change means that, in effect, we have converted each model into an object with a standard set of four member functions or methods. Now, it is trivial to write a new model that combines the computations of Model A and B. This new model first calls the Initialize() methods of Model A and B, then starts a time loop that calls the Run_Step() methods of Model A and B at each step, and finally calls the Finalize() methods of Model A and B. For models written in an object-oriented language, these four subroutines would be methods of a class, but for other languages, like Fortran, it is enough to simply break the model into these subroutines. Object-oriented programming concepts are reviewed in the next section.
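The lake example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class names, the constant rise and drop per step, and the Run_Coupled_Model() helper are hypothetical and are not part of any CSDMS tool.

```python
class SnowmeltModel:
    """Hypothetical Model A: meltwater runoff raises the lake level."""

    def Initialize(self, initial_depth=10.0):
        # Code that came before the time loop in the original model.
        self.depth = initial_depth     # lake depth [m]
        self.rise_per_step = 0.5       # assumed constant rise [m per step]

    def Run_Step(self, depth=None):
        # Code that was inside the time loop; returns the updated variable.
        if depth is not None:
            self.depth = depth         # use the value supplied by the caller
        self.depth += self.rise_per_step
        return self.depth

    def Finalize(self):
        # Code that came after the time loop (e.g. reporting, closing files).
        return self.depth

    def Run_Model(self, n_steps=10):
        # Stand-alone mode: reproduces the behavior of the original model.
        self.Initialize()
        for _ in range(n_steps):
            self.Run_Step()
        return self.Finalize()


class EvaporationModel:
    """Hypothetical Model B: evaporative loss lowers the lake level."""

    def Initialize(self, initial_depth=10.0):
        self.depth = initial_depth     # lake depth [m]
        self.drop_per_step = 0.25      # assumed constant drop [m per step]

    def Run_Step(self, depth=None):
        if depth is not None:
            self.depth = depth
        self.depth -= self.drop_per_step
        return self.depth

    def Finalize(self):
        return self.depth

    def Run_Model(self, n_steps=10):
        self.Initialize()
        for _ in range(n_steps):
            self.Run_Step()
        return self.Finalize()


def Run_Coupled_Model(model_a, model_b, n_steps=10, initial_depth=10.0):
    """A new model with a single time loop that drives both models."""
    model_a.Initialize(initial_depth)
    model_b.Initialize(initial_depth)
    depth = initial_depth
    for _ in range(n_steps):
        depth = model_a.Run_Step(depth)   # snowmelt raises the lake
        depth = model_b.Run_Step(depth)   # evaporation lowers it
    model_a.Finalize()
    model_b.Finalize()
    return depth


print(SnowmeltModel().Run_Model())
print(EvaporationModel().Run_Model())
print(Run_Coupled_Model(SnowmeltModel(), EvaporationModel()))
```

Neither model had to be edited to build the coupled version; the caller owns the only time loop and passes the shared lake depth from one Run_Step() call to the next.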

For lack of a better term, we refer to this Initialize(), Run_Step(), Finalize() pattern as an "IRF interface". This basic idea could be taken further by adding a Test() method. It is also helpful to have two additional methods, perhaps called Get_Input_Exchange_Item_List() and Get_Output_Exchange_Item_List(), that a caller can use to query what type of data the model is able to use as input or compute as output. While simple, these changes allow a caller to have fine-grained control over our model, and therefore use it in clever ways as part of something bigger. In essence, this set of methods is like a handheld remote control for our model. Compiling models in this form as shared objects (.so, Unix) or dynamically-linked libraries (.dll, Windows) is one way that they can then be used as plug-ins.
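As a rough sketch of how a caller might use the two query methods, the Python below checks whether the inputs of one component can be supplied by the outputs of others. The component classes, the item names and the Find_Unmet_Inputs() helper are all assumptions made for illustration.

```python
class SnowmeltComponent:
    """Hypothetical component; the item names are illustrative only."""

    def Get_Input_Exchange_Item_List(self):
        return ["air_temperature", "snow_depth"]

    def Get_Output_Exchange_Item_List(self):
        return ["lake_depth", "runoff"]


class EvaporationComponent:
    """Hypothetical component; the item names are illustrative only."""

    def Get_Input_Exchange_Item_List(self):
        return ["air_temperature", "lake_depth"]

    def Get_Output_Exchange_Item_List(self):
        return ["evaporation_rate", "lake_depth"]


def Find_Unmet_Inputs(consumer, providers):
    """Return the consumer's inputs that no provider offers as an output."""
    available = set()
    for provider in providers:
        available.update(provider.Get_Output_Exchange_Item_List())
    needed = set(consumer.Get_Input_Exchange_Item_List())
    return sorted(needed - available)


unmet = Find_Unmet_Inputs(EvaporationComponent(), [SnowmeltComponent()])
print(unmet)
```

Here the caller learns that "air_temperature" must come from somewhere else (a file or another component) before the two components can be linked.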

4. Provide complete descriptions of input and output "exchange items"

More complex models may involve large sets of input and output variables, which are referred to in OpenMI as "exchange items." For each input and output exchange item, we require that a few attributes be provided, such as the item's name, units and description. We also require a description of the computational grid (e.g. XY corner coordinates for every computational cell). CSDMS staff plans to develop an XML schema that can be used to provide this information in a standardized format. XML files of this type will be used by automated wrapping tools that convert IRF-form models into OpenMI-compliant components. CSDMS is currently developing these wrapping tools.
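Since the CSDMS XML schema has not yet been defined, the following Python sketch only illustrates the kind of information involved. The ExchangeItem class, the element and attribute names, and the To_XML() helper are hypothetical and should not be read as the eventual CSDMS format.

```python
from dataclasses import dataclass
import xml.etree.ElementTree as ET


@dataclass
class ExchangeItem:
    name: str          # e.g. "lake_depth"
    units: str         # e.g. "m"
    description: str   # short, human-readable description
    direction: str     # "input" or "output"


def To_XML(model_name, items):
    """Serialize exchange item metadata to an XML string (made-up layout)."""
    root = ET.Element("model", name=model_name)
    for item in items:
        node = ET.SubElement(root, "exchange_item",
                             name=item.name, direction=item.direction)
        ET.SubElement(node, "units").text = item.units
        ET.SubElement(node, "description").text = item.description
    return ET.tostring(root, encoding="unicode")


items = [
    ExchangeItem("air_temperature", "deg_C", "Near-surface air temperature", "input"),
    ExchangeItem("lake_depth", "m", "Depth of water in the lake", "output"),
]
xml_text = To_XML("evaporation_model", items)
print(xml_text)
```

A grid description (e.g. corner coordinates for each cell) could be added in the same way, as further child elements of the model element.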

5. Include suitable testing procedures and data

In view of the previous requirement, it would be ideal if every model submission not only had an IRF interface but included one or more "self-tests" in the form of a member function. One of these self-tests could simply be a "sanity check" that operates on trivial input data (perhaps even hard-coded). When analytic solutions are available for a particular model, these make excellent self-tests because they can be used to check the accuracy and stability of any numerical methods that are used.
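A self-test based on an analytic solution might look like the Python sketch below. The LinearReservoir model, its parameter values and the tolerance are assumptions chosen for illustration: storage S drains at a rate k*S, so the exact solution is S(t) = S(0)*exp(-k*t), and the test compares an explicit Euler solution against it.

```python
import math


class LinearReservoir:
    """Toy model with an IRF interface: dS/dt = -k * S."""

    def __init__(self, k=0.1, dt=0.01):
        self.k = k      # drainage coefficient [1/time]
        self.dt = dt    # time step

    def Initialize(self, storage=1.0):
        self.storage = storage
        self.time = 0.0

    def Run_Step(self):
        # Explicit (forward) Euler update.
        self.storage -= self.k * self.storage * self.dt
        self.time += self.dt
        return self.storage

    def Finalize(self):
        return self.storage

    def Test(self, n_steps=1000, tolerance=1e-3):
        """Self-test: compare the numerical result to the analytic solution."""
        self.Initialize(storage=1.0)
        for _ in range(n_steps):
            self.Run_Step()
        numerical = self.Finalize()
        exact = math.exp(-self.k * self.time)
        return abs(numerical - exact) < tolerance


print(LinearReservoir().Test())
print(LinearReservoir(dt=1.0).Test(n_steps=10))
```

The first call uses a small time step and passes; the second covers the same time span with a much coarser step and fails the same tolerance, which is the kind of accuracy problem a self-test is meant to expose.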

6. Include a user's guide or at least basic documentation

There is no substitute for good documentation. While good documentation is typically difficult to write, it does result in a net time savings for the model developer (by preventing technical support questions) and helps the model to be adopted more rapidly.

7. Specify what type of open-source license applies to your source code

The CSDMS project is focused on open-source software, but there are now many different open-source license types to choose from, each differing with regard to the details of how others may use your code. The CSDMS Integration Office needs to know this information in order to respect your intellectual property rights. Rosen (2004) is a good, online and open-source book that explains open source licensing in detail. CSDMS requires that contributions have an open source license type that is compliant with the standard set forth by the Open Source Initiative (OSI).

8. Use standard or generic file formats whenever possible for input and output

XML and INI are two examples of widely-used file formats that are flexible and standardized. You can learn more about XML in the section of this handbook titled "What is XML?".
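As a small illustration of reading model settings from an INI file, the sketch below uses the configparser module from Python's standard library. The section names, keys and values are hypothetical, and the text is read from a string here only so that the example is self-contained.

```python
import configparser

# Hypothetical contents of a model input file in INI format.
INI_TEXT = """
[model]
name = lake_model
n_steps = 10

[lake]
initial_depth = 10.0
units = m
"""


def Read_Settings(ini_text):
    """Parse INI-formatted text into a dictionary of typed settings."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    return {
        "name": parser.get("model", "name"),
        "n_steps": parser.getint("model", "n_steps"),
        "initial_depth": parser.getfloat("lake", "initial_depth"),
        "units": parser.get("lake", "units"),
    }


settings = Read_Settings(INI_TEXT)
print(settings)
```

To read from a file on disk instead, parser.read(filename) can be used in place of parser.read_string().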

9. Apply a CSDMS automated wrapping tool

Apply a CSDMS automated wrapping tool to the IRF version of your model (and its XML metadata) to create a CSDMS-compliant component. CSDMS is currently developing these wrapping tools. Our approach is to use the OpenMI interface standard within a CCA-compliant model-coupling framework such as Ccaffeine. OpenMI and Ccaffeine are explained in subsequent sections.

Review of Object-Oriented Programming Concepts

To understand component-based programming, which is the subject of the next section, it is necessary to first understand the basic concepts of object-oriented programming. Readers already familiar with object-oriented programming may wish to skip to the next section. This section provides only a brief discussion of object-oriented programming but builds up the key concepts in essentially the same way that they were developed historically. Readers interested in learning more can find a wealth of materials online by typing any term highlighted here into a search engine or an online encyclopedia like Wikipedia.

Data Types

When you stop to think about it, it is amazing how quickly programming languages have evolved during the relatively short time that computers have been around. To get a sense of the timespan involved, note that Fortran (The IBM Mathematical Formula Translation System) was developed in the 1950s by IBM as an alternative to assembly language for scientific computing. ALGOL (Algorithmic Language) followed in the late 1950s and Lisp was introduced in 1958. BASIC (Beginner's All-purpose Symbolic Instruction Code) was developed for use by non-science students at Dartmouth in 1964. The first personal or home computers (RadioShack TRS-80, Commodore PET and Apple II) became available in 1977 and were usually equipped with BASIC.

These early languages allowed users to work with different types of data or data types, such as boolean or logical (true/false), integers and floating-point (or decimal) numbers. These are now referred to as primitive data types (or basic types) and are available in every computer language. Early on, it was possible to store either a single number or an array of numbers as long as every number in the array had the same primitive type. Soon after, data types to store textual characters (letters, digits, symbols and white space) and arrays of characters or strings were introduced. Fortran also introduced a separate data type for complex numbers, which are simply pairs of decimal numbers (for the real and imaginary parts).

As computer programs became more complex, two important themes began to emerge, and these led to the introduction of new computer languages and to the eventual extension of existing languages. One theme was that of structured or modular programming, in which the goal was to streamline code design in ways that made it easier to understand, extend and maintain. This meant breaking large programs into smaller units and doing away with (or at least recommending against) goto statements in favor of for, while and repeat loops. A second major theme had to do with allowing programmers to create their own composite data types (or user-defined types) from the primitive data types. For example, Pascal was introduced in 1970 and allowed users to define their own data types called records. Records are collections of variables that may have different data types but that can be grouped together under a single name and treated as a single unit. In Pascal, the individual variables in the record are referred to as fields. Similarly, C was introduced in 1972 at Bell Labs and allowed users to define their own data types called structures, with the component variables being called members. The classic example of the kind of data that can be stored effectively in a record is employee data. Here, the individual fields might include things like the employee's name, title, date of birth, salary, address, phone number and so on. For each of these fields, there is a particular primitive type that is appropriate for storing the data, such as a string for the name and a floating-point number for the salary. Just as with the primitive types, it is then possible to work with arrays of records (or structures). Such an array could store the data for all employees that work for a particular company. With records and structures a new, simple and yet powerful syntax was introduced which is still in use today. 
In this syntax, a dot is placed between the record name and the field name, as in "employee.name", "circle.radius" or "csdms.colorado.edu".
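The employee record can be sketched in Python as follows. Python has no record keyword, so a dataclass with no methods of its own stands in for a Pascal record or C structure here; the field values are made up.

```python
from dataclasses import dataclass


@dataclass
class Employee:
    # Each field has the primitive type best suited to its data.
    name: str
    title: str
    salary: float


# An array (list) of records, as for all employees of a company.
staff = [
    Employee("A. Smith", "Hydrologist", 50000.0),
    Employee("B. Jones", "Programmer", 60000.0),
]

# Dot syntax: record name, then a dot, then the field name.
first_name = staff[0].name
total_salary = sum(employee.salary for employee in staff)
print(first_name, total_salary)
```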

Object-oriented programming can be viewed as a natural and yet very powerful extension of the concept of composite data types. The key idea is to bundle not only related items of data but also one or more functions that act on this data, into a single, user-defined type. Just as the terms structure (in C) and record (in Pascal) refer to user-defined "bundles of data" (and the keywords struct and record are used in code when defining these new data types), the term class is used in most (if not all) object-oriented languages to refer to this new type of bundle which can potentially contain both data and associated functions. (Note that a class doesn't need to have any functions, and in a sense even the primitive data types can be viewed as trivial classes.) You define a class just as you would define a new data type, and then individual "variables" that have this data type are referred to as objects or instances of the class. In C++, the terms member data and member function are used to refer to data items and functions that are associated with a class. The analogous terms in Java are fields (as in Pascal) and methods. For example, you might define a class called SongWriter, which has fields called "number_of_songs" and "biggest_hit" and methods called "sing" and "write". You could then instantiate two objects of the SongWriter type with names like DonnieIris and Shakira. In C++, a structure (struct) is the same as a class, except that the members of a structure are all public by default while the members of a class are all private by default.

Footnote: Technically, the syntax of an object-oriented programming language only makes it look as though the functions are bundled with the data. One can think of the member functions as only having one instance, which is part of the class definition rather than part of each individual object. Regardless of how this illusion is actually achieved, however, this bundling allows the data and a set of associated functions to be accessed under a single name, and with a similar syntax.
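The SongWriter class described above might be written in Python as in the sketch below. The method bodies and the song titles passed in are illustrative assumptions. The last lines also illustrate the footnote: each object has its own data, but both objects share the single function stored in the class definition.

```python
class SongWriter:
    def __init__(self, biggest_hit):
        # Fields (member data): each object gets its own copy.
        self.number_of_songs = 0
        self.biggest_hit = biggest_hit

    # Methods (member functions): defined once, as part of the class.
    def write(self, title):
        self.number_of_songs += 1
        return title

    def sing(self):
        return "Singing " + self.biggest_hit


# Instantiate two objects of the SongWriter type.
DonnieIris = SongWriter("Ah! Leah!")
Shakira = SongWriter("Hips Don't Lie")

Shakira.write("A new song")
print(DonnieIris.number_of_songs, Shakira.number_of_songs)
print(DonnieIris.sing())

# Both bound methods wrap the same underlying function object.
same_function = DonnieIris.sing.__func__ is Shakira.sing.__func__
print(same_function)
```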

The first object-oriented language is considered to be Simula 67, which was standardized in 1967. It introduced objects, classes, subclasses, virtual methods, coroutines and also featured garbage collection. It wasn't until twelve years later, in 1979, that the language "C with Classes" was developed. This new language, which was renamed to C++ in 1983, was directly influenced by Simula 67 and played a key role in helping object-oriented programming to become as widespread as it is today. Python, Java and C# are some of the most popular object-oriented languages today, but were introduced much more recently (1991, 1995 and 2001, respectively). Languages that are not object-oriented, such as C and Fortran, are often referred to as procedural languages. Keep in mind, however, that the member functions of a class are still just subroutines or procedures.

[Note: This section is to be completed with a short discussion of typecasting, return types, interfaces and the need for glue code.]

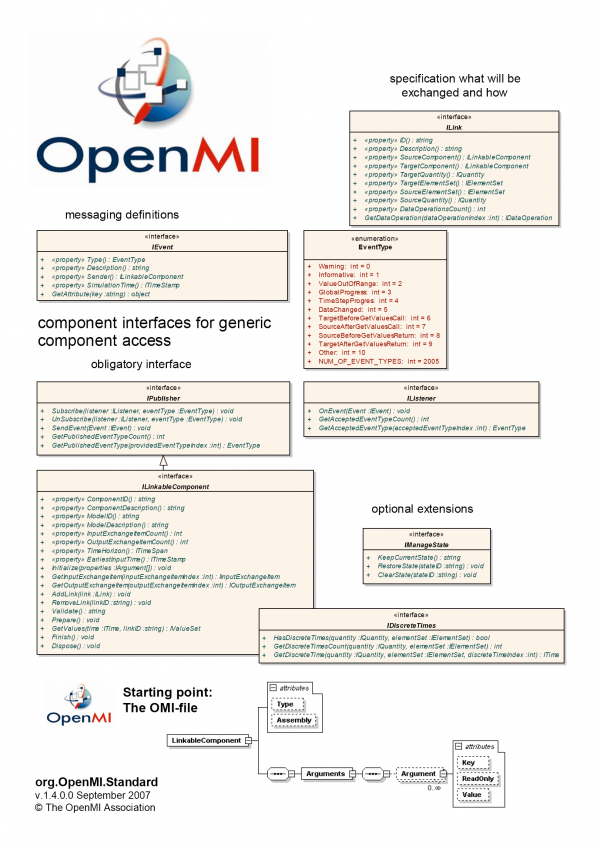

UML Class Diagrams

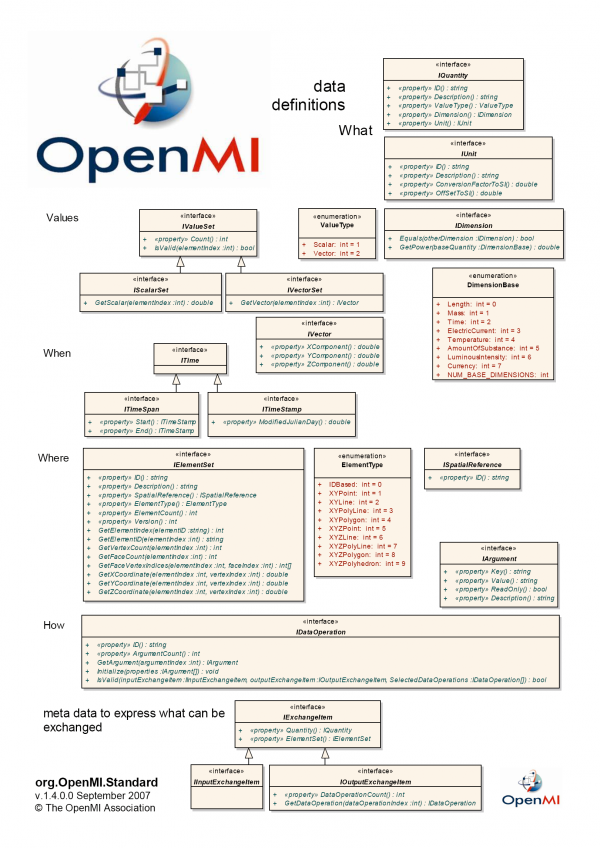

UML stands for Unified Modeling Language and is essentially a set of rules for creating "box diagrams" or "blueprints" that show class attributes and relationships between classes (such as inheritance) in an object-oriented application. You can learn more about UML at: [1]. An example of a fairly complicated UML diagram is given in Appendix B: UML Class Diagram for OpenMI.

Namespaces and Scope

By convention, package names are formed from a reversed URL (domain name) to ensure that each namespace is unique, e.g.

- gov.cca.Services

- org.oms.model.components

- Oatc.OpenMI.Sdk.Backbone

- edu.colorado.csdms.utils (or possibly org.csdms.utils)

Information Hiding and Encapsulation

Access levels (as used in Java): public, protected, friendly and private.

- public: a field, method, or class that is accessible to every class.

- protected: a field, method, or class that is accessible to the class itself, subclasses, and all classes in the same package or directory.

- friendly: a field, method, or class that is accessible to the class itself and to all classes in the same package or directory. Note that friendly is not a separate keyword. A field or method is declared friendly by virtue of the absence of any other access modifiers.

- private: a field or method that is accessible only to the class in which it is defined. Note that a class cannot be declared private as a whole.

Given the fundamental idea of classes and their associated data and functions, there are a number of other concepts that follow more or less naturally. These are important but are not necessarily critical to understanding what component-based programming is all about. The interested reader may wish to look up the following terms in Wikipedia: inheritance, polymorphism, overloading, accessors (setters and getters), constructors and destructors.

Inheritance

Polymorphism and Overloading

What is CCA?

Common Component Architecture (CCA) is a component architecture standard adopted by federal agencies (largely the Department of Energy and its national labs) and academics to allow software components to be combined and integrated for enhanced functionality on high-performance computing systems. The CCA Forum is a grassroots organization that started in 1998 to promote component technology standards (and code re-use) for HPC. CCA defines standards necessary for the interoperation of components developed in the context of different frameworks. That is, software components that adhere to these standards can be ported with relative ease to another CCA-compliant framework. While there are a variety of other component architecture standards in the commercial sector (e.g. CORBA, COM, .Net, JavaBeans, etc.) CCA was created to fulfill the needs of scientific, high-performance, open-source computing that are unmet by these other standards. For example, scientific software needs full support for complex numbers, dynamically dimensioned multidimensional arrays, Fortran (and other languages) and multiple processor systems. Armstrong et al. (1999) explain the motivation to create CCA by discussing the pros and cons of other component-based frameworks with regard to scientific, high-performance computing.

There are a number of large DOE projects, many associated with the SciDAC program (Scientific Discovery through Advanced Computing), that are devoted to the development of component technology for high-performance computing systems. Most of these are heavily invested in the CCA standard (or are moving toward it) and employ computer scientists and applied mathematicians. Some examples include:

- TASCS = The Center for Technology for Advanced Scientific Computing Software, which focuses on CCA and its associated tools ([2])

- CASC = Center for Applied Scientific Computing, which is home to CCA's Babel tool ([3])

- ITAPS = The Interoperable Technologies for Advanced Petascale Simulation, which focuses on meshing and discretization components, formerly TSTT ([4])

- PERI = Performance Engineering Research Institute, which focuses on HPC quality of service and performance issues ([5])

- TOPS = Terascale Optimal PDE Solvers, which focuses on PDE solver components ([6])

- PETSc = Portable, Extensible Toolkit for Scientific Computation, which focuses on linear and nonlinear PDE solvers for HPC, using MPI ([7])

CCA has been shown to be interoperable with ESMF and MCT, and CSDMS is currently working to make it interoperable with a Java version of OpenMI. For a list of papers that can help you learn more about CCA, please see our CCA Recommended Reading List.

The CCA Forum has also prepared a set of tutorials called "A Hands-On Guide to the Common Component Architecture".

CCA and Component Programming Concepts

Component-based programming is all about bringing the advantages of “plug and play” technology into the realm of software. When you buy a new peripheral for your computer, such as a mouse or printer, the goal is to be able to simply plug it into the right kind of port (e.g. a USB, serial or parallel port) and have it work, right out of the box. In order for this to be possible, however, there has to be some kind of published standard that the makers of peripheral devices can design against. For example, most computers nowadays have USB ports, and the USB (Universal Serial Bus) standard is well-documented. A computer's USB port can always be expected to provide certain capabilities, such as the ability to transmit data at a particular speed and the ability to provide a 5-volt supply of power with a maximum current of 500 mA. The result of this "standardization" is that it is usually pretty easy to buy a new device, plug it into your computer's USB port and start using it. Software "plug-ins" work in a similar manner, relying on the existence of certain types of "ports" that have a certain, well-documented structure or set of capabilities. In software, as in hardware, the term component refers to a unit that delivers a particular type of functionality and that can be "plugged in".

Component programming builds upon the fundamental concepts of object-oriented programming, with the main difference being the introduction or presence of a framework. Components are generally implemented as classes in an object-oriented language, and are essentially "black boxes" that encapsulate some useful bit of functionality. They are often present in the form of a library file, that is, a shared object (.so file, Unix), a dynamically linked library (.dll file, Windows) or a dynamic library (.dylib file, Macintosh). The purpose of a framework is to provide an environment in which components can be linked together to form applications. The framework provides a number of services that are accessible to all components, such as the linking mechanism itself. Often, a framework will also provide a uniform method of trapping or handling exceptions (i.e. errors), keeping in mind that each component will throw exceptions according to the rules of the language that it is written in. In a CCA-compliant framework, like Ccaffeine, there is a mechanism by which any component can be "promoted" to a framework service. Such a component is then "preloaded" by the framework so that it is available to all components without the need to explicitly "import" or "link" to the "service component". A similar kind of thing happens in Python, where the "__builtin__" module/component (named "builtins" in Python 3) is automatically imported when Python starts up so that all of its functions are available to every other module without them needing to first import it. Similarly again, in Java there are two packages that you never have to explicitly import, the "default" package and the "java.lang" package. So mathematical functions in the Math class, which is contained in "java.lang," are automatically available to every Java program.
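The idea of a framework that links components and "promotes" one of them to a service can be sketched with a toy example in Python. This is not the CCA or Ccaffeine API; the ToyFramework class, its method names and the two components are hypothetical. The last lines show the Python analogy from the text, using the Python 3 module name builtins.

```python
import builtins


class ToyFramework:
    """A minimal stand-in for a component framework (illustration only)."""

    def __init__(self):
        self.components = {}
        self.services = {}

    def Add_Component(self, name, component):
        self.components[name] = component
        # Every component sees the same, shared table of services.
        component.services = self.services

    def Promote_To_Service(self, name):
        # A promoted component becomes available to all components.
        self.services[name] = self.components[name]


class UnitConverter:
    def To_Millimeters(self, meters):
        return meters * 1000.0


class RainGauge:
    def Report(self, depth_in_meters):
        # Uses the service without importing or linking to it explicitly.
        converter = self.services["units"]
        return converter.To_Millimeters(depth_in_meters)


framework = ToyFramework()
gauge = RainGauge()
framework.Add_Component("units", UnitConverter())
framework.Add_Component("gauge", gauge)
framework.Promote_To_Service("units")
print(gauge.Report(0.5))

# Python analogy: built-in functions are available without an import.
print(builtins.len is len)
```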

There are a variety of different frameworks that adhere to the CCA component architecture standard, such as Ccaffeine, XCAT, SciRUN and Decaf. A framework can be CCA-compliant and still be tailored to the needs of a particular computing environment. For example, Ccaffeine was designed to support parallel computing and XCAT was designed to support distributed computing. Decaf was designed by the developers of Babel (Kumfert, 2003) primarily as a means of studying the technical aspects of the CCA standard itself. The important thing is that each of these frameworks adheres to the same standard, which makes it much easier to re-use a (CCA) component in another computational setting. The key idea is to try to isolate the components themselves, as much as possible, from the details of the computational environment in which they are deployed. If this is not done, then we fail to achieve one of the main goals of component programming, which is code re-use.

One thing that often distinguishes components from ordinary subroutines, software modules or classes is that they are able to communicate with other components that may be written in a different programming language. This problem is referred to as language interoperability or cross-language interoperability. In order for this to be possible, the framework must provide some kind of language interoperability tool that can create the necessary "glue code" between the components. For a CCA-compliant framework, that tool is Babel, and the supported languages are C, C++, Fortran (all years), Java and Python. Babel is described in more detail in a subsequent section. For Microsoft's .NET framework, that tool is CLR (Common Language Runtime) which is an implementation of an open standard called CLI (Common Language Infrastructure), also developed by Microsoft. Some of the supported languages are C# (a spin-off of Java), Visual Basic, C++/CLI, IronLisp, IronPython and IronRuby. CLR runs a form of bytecode called CIL (Common Intermediate Language). Note that CLI does not appear to support Fortran, Java, standard C++ or standard Python. The Java-based frameworks used by Sun Microsystems are JavaBeans and Enterprise JavaBeans (EJB). In the words of Armstrong et al. (1999): "Neither JavaBeans nor EJB directly addresses the issue of language interoperability, and therefore neither is appropriate for the scientific computing environment. Both JavaBeans and EJB assume that all components are written in the Java language. Although the Java Native Interface [34] library supports interoperability with C and C++, using the Java virtual machine to mediate communication between components would incur an intolerable performance penalty on every inter-component function call." (Note: It is possible that things have changed since the Armstrong paper was written.)

Interface vs. Implementation

The word "interface" may be the most overloaded word in computer science. In each case, however, it adheres to the standard, English meaning of the word that has to do with a boundary between two things and what happens at the boundary. The overloading has to do with the large numbers of pairs of things that we could be talking about. Many people hear the word interface and immediately think of the interface between a human and a computer program, which is typically either a command-line interface (CLI) or a graphical user interface (GUI). While this is a very interesting and complex subject in itself, this is usually not what computer scientists are talking about. Instead, they tend to be interested in other types of interface, such as the one between a pair of software components, or between a component and a framework, or between a developer and a set of utilities (i.e. an API or a software development kit (SDK)).

Within the present context of component programming, we are primarily interested in the interfaces between components. In this context, the word interface has a very specific meaning, essentially the same as how it is used in the Java programming language. (You can therefore learn more about component interfaces in a Java textbook.) It is a user-defined entity/type, very similar to an abstract class. It does not have any data fields, but instead is a named set of methods or member functions, each defined completely with regard to argument types and return types but without any actual implementation. A CCA port is simply this type of interface. Interfaces are the name of the game when it comes to the question of re-usability or "plug and play". Once an interface has been defined, one can then ask the question: Does this component have interface A? To answer the question we merely have to look at the methods (or member functions) that the component has with regard to their names, argument types and return types. If a component does "have" a given interface, then we say that it exposes or implements that interface, meaning that it contains an actual implementation for each of those methods. It is perfectly fine if the component has additional methods beyond the ones that comprise a particular interface. Because of this, it is possible (and frequently useful) for a single component to expose multiple, different interfaces or ports. For example, this may allow it to be used in a greater variety of settings. There is a good analogy in computer hardware, where a computer or peripheral may actually have a number of different ports (e.g. USB, serial, parallel, ethernet) to enable them to communicate with a wider variety of other components.
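The question "Does this component have interface A?" can be illustrated with a minimal Python 3 sketch. Python has no built-in interface type like Java's, so the check below is done at run time and compares only method names and argument names, not data types. The names `IRFInterface`, `implements`, `Tank` and `Incomplete` are hypothetical and are used only for illustration.

```python
import inspect


class IRFInterface(object):
    """Hypothetical interface: method names and arguments, no implementation."""

    def initialize(self):
        raise NotImplementedError

    def run_step(self):
        raise NotImplementedError

    def get_value(self, value_code, time):
        raise NotImplementedError

    def finalize(self):
        raise NotImplementedError


def method_names(interface):
    """Return the public method names that make up an interface."""
    return [name for name, member in inspect.getmembers(interface, callable)
            if not name.startswith('_')]


def implements(component, interface):
    """True if the component has every interface method, with matching argument names."""
    for name in method_names(interface):
        candidate = getattr(type(component), name, None)
        if not callable(candidate):
            return False
        wanted = list(inspect.signature(getattr(interface, name)).parameters)
        found = list(inspect.signature(candidate).parameters)
        if wanted != found:
            return False
    return True


class Tank(object):
    """Implements the whole interface, plus one extra method of its own."""
    def initialize(self): self.depth = 2.0
    def run_step(self): self.depth *= 0.5
    def get_value(self, value_code, time): return self.depth
    def finalize(self): pass
    def print_tank_data(self): print(self.depth)


class Incomplete(object):
    """Lacks run_step(), get_value() and finalize()."""
    def initialize(self): pass


print(implements(Tank(), IRFInterface))         # True
print(implements(Incomplete(), IRFInterface))   # False
```

The extra `print_tank_data()` method does not prevent `Tank` from exposing the interface, which matches the point made above that a component may have additional methods beyond those of a given interface.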

The distinction between interface and implementation is an important theme in computer science. The word pair declaration and definition is used in a similar way. A function (or class) declaration tells us what the function does (and how to interact with or use it) but not how it works. To see how the function actually works, we need to look at how it has been defined or implemented. C and C++ programmers will be familiar with this idea, where variables, functions, classes, etc. are often declared in a header file with the filename extension .h or .hpp (that is, data types of all arguments and return values are given) whereas they are defined in a separate file with extension .c or .cpp. (Discuss how the compiler uses this information.) Of course, most of the gadgets that we use every day (from iPods to cars) are like this. We need to understand their interfaces in order to use them (and interfaces are often standardized across vendors), but often we have no idea what is happening inside or how they actually work, which may be quite complex.

- forward declarations and function prototypes

- CCA uses and provides ports, interfaces

It is important to realize that the CCA standard and the tools in the CCA tool chain are powerful and quite general but they do not provide us with an interface for linking models. Each scientific modeling community that wishes to make use of these tools is responsible for designing or selecting component interfaces (or ports) that are best suited to the kinds of models that they wish to link together. This is a big job in and of itself that involves social as well as technical issues and typically requires a significant time investment. In some disciplines, such as molecular biology or fusion research, the models may look quite different from ours. Ours tend to follow the pattern of a 1D, 2D or 3D array of values (often multiple, coupled arrays) advancing forward in time. However, our models can still be quite different from each other with regard to their dimensionality or the type of computational grid that they use (e.g. rectangles, triangles or polygons), or whether they are implicit or explicit in time. Therefore, the CSDMS project requires a fairly sophisticated type of interface that can accommodate or "bridge over" these differences.

During the course of working on this interface problem, the CSDMS Integration Office has studied an open-source, modeling interface standard called OpenMI that appears to meet many of the needs of our project. It is not a perfect fit, however, since it was designed for single-processor systems and until now has only been implemented and tested on the Windows platform. One of the tasks required in order to use the OpenMI interface standard within a CCA-compliant framework is to write the code for creating an "OpenMI port". In order to do this, we converted a Java version of the OpenMI interface definition to a set of about 30 SIDL files. We then wrote a Bocca script (see How to Manage a Project with Bocca) that defines an OpenMI port. This script is given in Appendix C: A Bocca Script to Define an OpenMI Port. The UML class diagram for the OpenMI model interface is given in Appendix B: UML Class Diagram for OpenMI. OpenMI is described in the section titled What is OpenMI? and the references cited therein.

Advantages of Component-Based Programming

- Can be written in different languages and still communicate.

- Can be replaced, added to or deleted from an app. at run-time via dynamic linking.

- Can easily be moved to a remote location (different address space) without recompiling other parts of the application (via RMI/RPC support).

- Can have multiple different interfaces and can "have state" or be "stateful," which simply means that data stored in the fields of a "component object" (class instance) retain their values between method calls for the lifetime of the object.

- Can be customized with configuration parameters when application is built.

- Provide a clear specification of inputs needed from other components in the system.

- Have potential to encapsulate parallelism better.

- Allows for multicasting calls that do not need return values (i.e. sending data to multiple components simultaneously).

- With components, clean separation of functionality is mandatory vs. optional.

- Facilitates code re-use and rapid comparison of different methods, etc.

- Facilitates efficient cooperation between groups, each doing what they do best.

- Promotes economy of scale through development of community standards.
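Two of the points in this list, run-time replacement and statefulness, can be illustrated with a small Python sketch. The class and function names are hypothetical. The two components compute mean flow velocity with Manning's formula and with the Chezy formula (both in SI units); because they share the same `get_velocity()` method, the driver can use either one without being changed.

```python
class ManningVelocity(object):
    """Velocity from Manning's formula: v = R^(2/3) * S^(1/2) / n."""

    def __init__(self, roughness_n):
        self.roughness_n = roughness_n
        self.n_calls = 0   # state that persists between method calls

    def get_velocity(self, hydraulic_radius, slope):
        self.n_calls += 1
        return (hydraulic_radius ** (2.0 / 3.0)) * (slope ** 0.5) / self.roughness_n


class ChezyVelocity(object):
    """Velocity from the Chezy formula: v = C * sqrt(R * S)."""

    def __init__(self, chezy_c):
        self.chezy_c = chezy_c
        self.n_calls = 0

    def get_velocity(self, hydraulic_radius, slope):
        self.n_calls += 1
        return self.chezy_c * (hydraulic_radius * slope) ** 0.5


def run_driver(velocity_component, radii, slope):
    """The driver only relies on the shared get_velocity() method."""
    return [velocity_component.get_velocity(r, slope) for r in radii]


# The component to use is chosen by name at run time.
available = {'manning': ManningVelocity(roughness_n=0.05),
             'chezy': ChezyVelocity(chezy_c=40.0)}

for name in ('manning', 'chezy'):
    component = available[name]
    velocities = run_driver(component, [0.5, 1.0], slope=0.01)
    print(name, velocities, 'calls so far:', component.n_calls)
```

The parameter values (n = 0.05, C = 40) are arbitrary and chosen only to make the example run.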

Example of a Water Tank Model with an IRF Interface

The purpose of this section is to present an example of a realistic but simple model that can be used to illustrate how components written in different programming languages can be linked together to create a model. (See Libii (2003) for a description of "water tank physics".) We will use this example to illustrate a number of different points. This is a time-stepping model that has an object-oriented design. It has an "IRF interface", as defined above. It reads the data it needs from an input file and prints results to the screen. It also uses Python's "self" convention for a class instance to refer to its own fields and methods (similar to the "this" pointer in C++ and "this" keyword in Java) and uses descriptive, easy-to-understand names for both fields (variables) and methods (functions).

We first define a class called "water_tank" in Python that has the following methods: run_model(), initialize(), run_step(), get_value(), finalize(), update_rain(), read_tank_data(), and print_tank_data(). Each method is a Python function defined in a "def" block. Note that Python is dynamically typed, which makes the code more compact and easy to read.

    #----------------------------------------------------------------
    from numpy import zeros, random, double
    from math import pi, sqrt

    class water_tank:
        """Simulate the slow draining of a large water tank under
           the influence of gravity."""
        #------------------------------------------------------------
        def run_model(self):
            self.initialize()
            for k in range(1,self.n_steps+1):
                #print 'k =', k
                self.run_step()
            self.finalize()
        #------------------------------------------------------------
        def initialize(self):
            self.g = 9.81 # [m/s^2]
            self.tank_data_filename = 'tank_data.txt'
            # Read tank settings from "tank_file"
            self.read_tank_data()
            self.volume = self.depth * self.top_area #[m^3]
            self.out_area = pi * self.out_radius**2.0
            self.print_tank_data()
            # Open "rain_file" to read data
            self.rain_file.open()
            # Number of rain records read so far
            self.n_rain_read = 0
        #------------------------------------------------------------
        def run_step(self):
            # Read next rainfall data entry...
            self.update_rain()
            # Convert rain rate from [mm/hr] to [m/s]
            rainrate_mps = self.rainrate / (3600.0 * 1000.0)
            Q_in = rainrate_mps * self.top_area # [m^3/s]
            if self.depth > 0:
                Q_out = self.velocity * self.out_area # [m^3/s]
            else:
                Q_out = 0.0
            dVol = (Q_in - Q_out) * self.dt
            self.volume = max(self.volume + dVol, 0.0)
            self.depth = (self.volume / self.top_area)
            self.velocity = sqrt(2.0 * self.g * self.depth)
            print 'depth =', self.depth, '[meters]'
            # Write new depth to an output file ?
        #------------------------------------------------------------
        def get_value(self, value_code, time):
            #----------------------------------------------------
            # Call run_step() method as many times as necessary
            # in order to compute the requested value at the
            # requested time. Note that we do not override the
            # value of n_steps from tank_data_file.
            #----------------------------------------------------
            n_steps = int(time / self.dt)
            for k in range(n_steps):
                self.run_step()
            if value_code == 0: return self.depth
            elif value_code == 1: return self.velocity
            elif value_code == 2: return self.volume
            elif value_code == 3: return self.rainrate
            elif value_code == 4: return self.duration
        #------------------------------------------------------------
        def finalize(self):
            print ' '
            print 'Finished with water tank simulation.'
            print 'Final depth =', self.depth
            print ' '
            self.rain_file.close()
            # Close an output file ?
        #------------------------------------------------------------
        def update_rain(self):
            # Read one record per time step until the rain file is
            # used up; after that, assume that there is no rain.
            if self.n_rain_read < self.rain_file.n_lines:
                record = self.rain_file.read_record()
                self.rainrate = record[0]
                self.duration = record[1]
                self.n_rain_read += 1
            else: self.rainrate = 0.0
        #------------------------------------------------------------
        def read_tank_data(self):
            tank_file = input_file(self.tank_data_filename)
            # What if tank_file doesn't exist ?
            #-----------------------------------
            tank_file.open()
            self.dt = tank_file.read_value()
            self.n_steps = tank_file.read_value(dtype='integer')
            self.init_depth = tank_file.read_value()
            self.top_area = tank_file.read_value()
            self.out_radius = tank_file.read_value()
            self.rain_data_filename = tank_file.read_value(dtype='string')
            tank_file.close()
            # These variables are computed from others
            self.depth = self.init_depth.copy()
            self.velocity = sqrt(2 * self.g * self.depth)
            self.volume = self.depth * self.top_area #[m^3]
            self.out_area = pi * self.out_radius**2.0
            # Use "input_file" class to create rain_file object
            self.rain_file = input_file(self.rain_data_filename)
        #------------------------------------------------------------
        def print_tank_data(self):
            print 'dt =', self.dt
            print 'n_steps =', self.n_steps
            print 'init_depth =', self.init_depth
            print 'top_area =', self.top_area
            print 'out_radius =', self.out_radius
            print 'depth =', self.depth
            print 'velocity =', self.velocity
            print 'volume =', self.volume
            print 'out_area =', self.out_area
            print 'rain_file =', self.rain_data_filename
            print ' '

We can run this "tank model" at a Python command prompt by typing:

    >>> tank_model = water_tank()
    >>> tank_model.run_model()

We could also define a function that contains these two lines. However, because of the initialize(), run_step() and finalize() methods, a caller can also use this model as part of a larger application. Notice that the "water_tank" class can have any number of additional methods for its own use, such as the ones here called "update_rain", "read_tank_data" and "print_tank_data". The "read_tank_data()" method reads data from a short input file. This file might include the following lines, for example:

    dt = 1.0
    n_steps = 20
    init_depth = 2.0
    top_area = 100.0
    out_radius = 1.0
    rain_file = rain_data.txt

The additional input file, "rain_data.txt", could contain the following lines:

    90.0 1.0
    100.0 1.0
    110.0 1.0
    120.0 1.0
    130.0 1.0
    140.0 1.0
    150.0 1.0
    160.0 1.0
    170.0 1.0
    180.0 1.0

Notice, however, that this water tank model makes use of a class called "input_file" for reading both of these input files in the read_tank_data() method. Here is the Python code for this class, which has 8 methods (or member functions).

    #----------------------------------------------------------------
    class input_file:
        """Utility class to read data from a text file."""
        #------------------------------------------------------------
        def __init__(self, filename='data.txt'):
            self.filename = filename
            self.n_lines = 0
            self.n_vals = 0
            self.check_format()
            self.count_lines() ######
            #if self.n_lines == 0: self.count_lines() #########
        #------------------------------------------------------------
        def open(self):
            try: f = open(self.filename, 'r')
            except IOError, (errno, strerror):
                print 'Could not find input file named:'
                print self.filename
                # print "I/O error(%s): %s" % (errno, strerror)
                print ' '
                return
            #------------------------------------------------------------
            # The approach here is called "delegation" vs. inheritance.
            # It would also be possible (in Python 2.2 and higher) for
            # our input_file class to inherit from Python's built-in
            # "file" type. But that wouldn't necessarily be better.
            # Type "Python, subclassing of built-in types" or
            # "Python, how to subclass file" into a search engine for
            # more information.
            #------------------------------------------------------------
            self.file = f # save the file object as an attribute
        #------------------------------------------------------------
        def check_format(self):
            self.open()
            record = self.read_record(format='words')
            n_words = len(record)
            #--------------------------------------
            # Is first value a number or keyword ?
            #--------------------------------------
            try:
                v = double(record[0])
                format = 'numeric'
            except ValueError:
                format = 'unknown'
                if n_words > 1:
                    if record[1] == "=":
                        format = 'key_value'
            self.format = format
            self.close()
        #------------------------------------------------------------
        def count_lines(self):
            print 'Counting lines in file...'
            self.open()
            n_lines = 0
            n_vals = 0
            for line in self.file:
                #-------------------------------------
                # Note: len(line) == 1 for null lines
                #-------------------------------------
                if len(line.strip()) > 0:
                    n_lines += 1
                    words = line.split()
                    n_words = len(words)
                    n_vals = max(n_vals, n_words)
            self.n_lines = n_lines
            self.n_vals = n_vals
            #--------------------------------------
            # Initialize an array for reading data
            #--------------------------------------
            self.data = zeros([n_lines, n_vals], dtype='d')
            self.data = self.data - 9999.0
            self.close()
            print ' Number of lines =', n_lines
            print ' '
        #------------------------------------------------------------
        def read_record(self, format='not_set', dtype='double'):
            # Should this be named "next_record" ?
            if format == 'not_set': format=self.format
            line = self.file.readline()
            # Skip blank lines, but stop at end of file, where
            # readline() returns an empty string.
            while line != '' and len(line.strip()) == 0:
                line = self.file.readline()
            words = line.split()
            n_words = len(words)
            if format == 'numeric':
                return map(double, words)
            elif format == 'key_value':
                key = words[0]
                value = words[2]
                if dtype == 'double': value = double(value)
                if dtype == 'integer': value = int(value)
                return [key, value]
            elif format == 'words':
                return words
        #------------------------------------------------------------
        def read_value(self, dtype='double'):
            # Should this be named "next_value" ?
            record = self.read_record(dtype=dtype)
            if self.format == 'numeric':
                return record[0]
            elif self.format == 'key_value':
                return record[1]
        #------------------------------------------------------------
        def read_all(self):
            #--------------------------------------------
            # Currently assumes all values are doubles,
            # but read_record() and read_value() do not.
            #--------------------------------------------
            if self.n_lines == 0: self.count_lines()
            self.open()
            # Loop over a row index instead of over the file object,
            # since read_record() advances the file with readline().
            for row in range(self.n_lines):
                record = self.read_record()
                if self.format == 'numeric':
                    for col in range(len(record)):
                        self.data[row,col] = double(record[col])
                elif self.format == 'key_value':
                    self.data[row,0] = double(record[1])
            self.close()
        #------------------------------------------------------------
        def close(self):
            self.file.close()

What is Babel?

Babel is an open-source, language interoperability tool (and compiler) that automatically generates the "glue code" that is necessary in order for components written in different computer languages to communicate. It currently supports C, C++, Fortran (77, 90, 95 and 2003), Java and Python. Babel is much more than a "least common denominator" solution; it even enables passing of variables with data types that may not normally be supported by the target language (e.g. objects, complex numbers). Babel was designed to support scientific, high-performance computing and is one of the key tools in the CCA tool chain. It won an R&D 100 design award in 2006 for "The world's most rapid communication among many programming languages in a single application." It has been shown to outperform similar technologies such as CORBA and Microsoft's COM and .NET.

To create the glue code that two components written in different programming languages need in order to "communicate" (or pass data between them), Babel only needs to know about the interfaces of the two components. It does not need any implementation details. Babel was therefore designed so that it can ingest a description of an interface in either of two fairly "language neutral" forms, XML (eXtensible Markup Language) or SIDL (Scientific Interface Definition Language). The SIDL language (somewhat similar to CORBA's IDL) was developed for the Babel project. Its sole purpose is to provide a concise description of a scientific software component interface. This interface description includes complete information about a component's interface, such as the data types of all arguments and return values for each of the component's methods (or member functions). SIDL has a complete set of fundamental data types to support scientific computing, from booleans to double precision complex numbers. It also supports more sophisticated data types such as enumerations, strings, objects, and dynamic multi-dimensional arrays. The syntax of SIDL is very similar to Java. A complete description of SIDL syntax and grammar can be found in "Appendix B: SIDL Grammar" in the Babel User's Guide. Complete details on how to represent a SIDL interface in XML are given in "Appendix C: Extensible Markup Language (XML)" of the same user's guide.

How to Manage a Project with Bocca

Bocca is a tool in the CCA tool chain that was designed to help you create, edit and manage a set of CCA components, ports, etc. that are associated with a particular project. As we will see in the next section, once you have prepared a set of CCA-compliant components and ports, you can then use a CCA-compliant framework like Ccaffeine to actually link components from this set together to create applications or composite models.

Bocca can be viewed as a development environment tool that allows application developers to perform rapid component prototyping while maintaining robust software-engineering practices suitable to HPC environments. Bocca provides project management and a comprehensive build environment for creating and managing applications composed of CCA components. Bocca operates in a language-agnostic way by automatically invoking the lower-level Babel tool. Bocca was designed to free users from mundane, low-level tasks so they can focus on the scientific aspects of their applications. Bocca can be used interactively at a Unix command prompt or within shell scripts. It currently does not have a graphical user interface. Bocca has only been around for about one year and is still in the alpha phase of development. Its current version number is 0.4.2 (Paris release, November 2007). A planned feature for Bocca in the very near future is the ability to create stand-alone executables for projects by automatically bundling all required libraries on a given platform. The key reference for Bocca is a paper by Elwasif et al. (2007), available in PDF format at: [8]

You can see examples of how to use Bocca in the set of tutorials called "A Hands-On Guide to the Common Component Architecture", written by CCA Forum members. That guide is available online at: [9]

There is not yet a user's guide for Bocca, but its help system allows you to get help on a particular topic by typing commands like:

    bocca help <topic>

and

    bocca change <subject> --help

The general pattern of a Bocca command is:

    bocca [options] <verb> <subject> [suboptions] <target_name>

The allowed verbs are listed below and can be applied to different "subjects" (or CCA entity classes). The currently supported "subjects" are also listed below. "Target names" are SIDL type names, such as mypkg.MyComponent. The following two lists describe the allowed verbs and subjects in version 0.4.2 (Paris release, Nov 2007).

List of Bocca Command Verbs

| Verb | Description |
|---|---|
| create | Create a new Bocca entity based on the subject argument. |
| change | Change some existing project element(s). |
| config | |
| display | |
| edit | Open a text editor to edit the source code for the subject argument. |
| help | Get help on Bocca commands and syntax. |
| remove | Remove the given "subject" from the project. |
| rename | |
| update | |
| version | |
| whereis | Print the path of the file that "edit" would open, without opening it. |

List of Bocca Command Subjects

application class component example interface package port project
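As a rough illustration of the general command pattern, the following Python sketch assembles a Bocca command line from its parts and rejects verbs or subjects that are not in the two lists above. The function name `bocca_command` is hypothetical, and the sketch only builds the argument list; it does not run Bocca or check that a given verb and subject make sense together.

```python
BOCCA_VERBS = ('create', 'change', 'config', 'display', 'edit', 'help',
               'remove', 'rename', 'update', 'version', 'whereis')

BOCCA_SUBJECTS = ('application', 'class', 'component', 'example',
                  'interface', 'package', 'port', 'project')


def bocca_command(verb, subject, target_name, options=(), suboptions=()):
    """Build: bocca [options] <verb> <subject> [suboptions] <target_name>"""
    if verb not in BOCCA_VERBS:
        raise ValueError('Unknown Bocca verb: %s' % verb)
    if subject not in BOCCA_SUBJECTS:
        raise ValueError('Unknown Bocca subject: %s' % subject)
    return (['bocca'] + list(options) + [verb, subject]
            + list(suboptions) + [target_name])


print(' '.join(bocca_command('create', 'port', 'InputPort')))
# bocca create port InputPort

print(' '.join(bocca_command('create', 'component', 'Initialize',
                             suboptions=['--provides=InputPort:input'])))
# bocca create component --provides=InputPort:input Initialize
```

Returning a list rather than a single string makes it easy to pass the result to a routine such as Python's `subprocess.call()` if you later want to execute the command.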

How to Edit the Implementation Files for Components and Ports

While Bocca lets you focus on the interfaces and connectivity of components in a project (the application skeleton), at some point you need to provide an actual implementation for every component and port in your project. Bocca provides the preferred way to do this, which frees you from needing to know exactly where all of the implementation files are stored in the project's directory tree. Recall that Bocca manages the many files that Babel requires and produces, keeping track of each file's location so you don't need to do so. To edit the implementation file (source code) for a component, you can use the command:

    > bocca edit component <component_name>

Similarly, to edit the SIDL source code for a port, you can use:

    > bocca edit port <port_name>

This will open the corresponding implementation file with a text editor. (You can also replace the word "edit" with "whereis" in the above commands and Bocca will print out the path of the file that would be edited without doing anything.) If the environment variable BOCCA_EDITOR is set, Bocca will use that editor; otherwise it uses EDITOR. Note that while vi, emacs and nedit support "+N" to specify the initial line number in the file to be opened, many other editors do not. For those that don't, you will need to search for the string "Insert-code-here" to move to sections that can be edited. The "nedit" program has a user-friendly, X11-based GUI and works on most Unix platforms, including Mac OS X. It is a full-featured text editor aimed at code developers and can be downloaded at no cost from: [10].
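The editor lookup order described above (BOCCA_EDITOR first, then EDITOR) can be mimicked in Python as follows. This is only a sketch of the rule as stated in the text, not Bocca's own code; the function name `choose_editor` is hypothetical, and treating an empty value the same as an unset variable is an assumption.

```python
import os


def choose_editor(environ=None):
    """Return BOCCA_EDITOR if set, otherwise EDITOR, otherwise None."""
    if environ is None:
        environ = os.environ
    # Assumption: an empty string counts as "not set".
    return environ.get('BOCCA_EDITOR') or environ.get('EDITOR')


print(choose_editor({'BOCCA_EDITOR': 'nedit', 'EDITOR': 'vi'}))   # nedit
print(choose_editor({'EDITOR': 'vi'}))                            # vi
print(choose_editor({}))                                          # None
```

Calling `choose_editor()` with no argument applies the same rule to the environment of the current process.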

Of course, you can also find the file in the project's directory tree and open it for editing with any text editor of your choosing. The path to a component's implementation file from the top-level of your project folder will be something like:

    components/myProject.myComponent/myProject/myComponent_Impl.py

Similarly the path to a port's (SIDL) implementation file will be something like:

    ports/sidl/myProject.myPort.sidl

Within these files, you can search for the string "Insert-code-here" to find places where you can insert code and comments. Editing implementation files this way may be more frustrating than using Bocca, since the "bocca edit" approach automatically updates dependencies, that is, it regenerates all other source files that depend on the source file that is edited.
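If your editor has no convenient search feature, a few lines of Python can locate the "Insert-code-here" markers for you. The sketch below is hypothetical helper code, not part of Bocca. The sample lines are made up for illustration and do not reproduce the actual contents of a generated implementation file; the "+N" form of the editor command is the one mentioned above for vi, emacs and nedit.

```python
def find_insert_markers(lines, marker='Insert-code-here'):
    """Return the 1-based line numbers of all lines that contain the marker."""
    return [number for number, line in enumerate(lines, 1) if marker in line]


def editor_command(editor, path, line_number):
    """Build a command that opens a file at a given line ("+N" convention)."""
    return [editor, '+%d' % line_number, path]


# Made-up stand-in for the text of an implementation file.
sample_lines = [
    'class myComponent:',
    '    def go(self):',
    '        # Insert-code-here (made-up marker line)',
    '        pass',
    '    def setServices(self, services):',
    '        # Insert-code-here (made-up marker line)',
    '        pass',
]

markers = find_insert_markers(sample_lines)
print(markers)   # [3, 6]

path = 'components/myProject.myComponent/myProject/myComponent_Impl.py'
print(' '.join(editor_command('vi', path, markers[0])))
```

To scan a real file, pass the list returned by the file object's `readlines()` method instead of `sample_lines`.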

A Sample Bocca Script

Here is a shell script that uses Bocca commands to create a simple test project. This script only creates the "application skeleton" for the project; afterwards you will need to edit implementation files for each component and port as explained in the previous section. Another example of a Bocca script is given in Appendix C: "A Bocca Script to Define an OpenMI Port".

    #! /bin/bash
    # Use BOCCA to create a CCA test project.
    #-----------------------
    # Set necessary paths
    #-----------------------
    source $HOME/.bashrc
    echo "==========================================="
    echo " Building example CCA project with BOCCA "
    echo "==========================================="
    #--------------------------------------
    # Create a new project with BOCCA and
    # Python as the default language
    #---------------------------------------
    cd $HOME/Desktop
    mkdir cca_ex2; cd cca_ex2
    bocca create project myProject --language=python
    cd myProject
    #--------------------------------
    # Create some ports with BOCCA
    #--------------------------------
    bocca create port InputPort
    bocca create port vPort
    bocca create port ChannelShapePort
    bocca create port OutputPort
    #----------------------------------------
    # Create a Driver component with BOCCA
    #---------------------------------------
    bocca create component Driver \
        --provides=gov.cca.ports.GoPort:run \
        --uses=InputPort:input \
        --uses=vPort:v \
        --uses=OutputPort:output
    #----------------------------------
    # Create an Initialize component
    #----------------------------------
    bocca create component Initialize \
        --provides=InputPort:input
    #-----------------------------------------------
    # Create two components that compute velocity
    #-----------------------------------------------
    bocca create component ManningVelocity \
        --provides=vPort:v \
        --uses=ChannelShapePort:shape
    bocca create component LawOfWallVelocity \
        --provides=vPort:v \
        --uses=ChannelShapePort:shape
    #------------------------------------------------
    # Create some channel cross-section components
    #------------------------------------------------
    bocca create component TrapezoidShape \
        --provides=ChannelShapePort:shape
    bocca create component HalfCircleShape \
        --provides=ChannelShapePort:shape
    #-------------------------------------
    # Create a Finalize component
    #-------------------------------------
    bocca create component Finalize \
        --provides=OutputPort:output
    #--------------------------------------
    # Configure and make the new project
    #--------------------------------------
    ./configure; make

How to Link CCA Components with Ccaffeine

As explained in Section ***, Ccaffeine is only one of many CCA-compliant frameworks for linking components, but it is the one that is used the most. There are at least three ways to use Ccaffeine, (1) with a GUI, (2) at an interactive command prompt or (3) with a "Ccaffeine script". The GUI is especially helpful for new users and for demonstrations and simple prototypes, while scripting is often faster for programmers and provides them with greater flexibility.

Ccaffeine is the standard CCA framework that supports parallel computing. Three distinct “Ccaffeine executables” are available, namely:

- Ccafe-client = a client version that expects to connect to a multiplexer front end which can then be connected to the Ccaffeine-GUI or a plain command line interface.

- Ccafe-single = a single-process, interactive version useful for debugging

- Ccafe-batch = a batch version that has no need of a front end and no interactive ability

These executables make use of “Ccaffeine resource files” that have “rc” in the filename (e.g. test-gui-rc). The Ccaffeine Muxer is a central multiplexer that creates a single multiplexed communication stream (back to the GUI) out of the many Ccafe-client streams. For more information, see the online manual for Ccaffeine at: [11]

Linking Components with the Ccaffeine GUI

Assuming that you have successfully installed the CCA tool chain on your computer (including Ccaffeine and Bocca) and that you have used Bocca to create a project (with some components and ports), starting the GUI is easy. You simply run a shell script that Bocca has created for you automatically and placed in your project's "utils" folder. That is, if you're at the top level of your CCA project directory, you just type:

> utils/run-gui.sh

This shell script calls two other shell scripts called gui-backend.sh and gui.sh. Note that Ccaffeine and its GUI are run as two separate processes, which makes it possible to run the GUI process locally and the Ccaffeine process on a remote server. You can learn how to do this from the Ccaffeine User's Manual (see URL above).

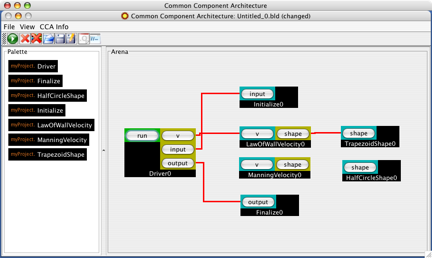

The Ccaffeine GUI is pretty easy to use. The "palette" of available components is shown in the left-hand side panel. You click and drag to move a component from the palette into the "arena" on the right. This causes the component to be "instantiated" in the framework and you will then be prompted for an "instance name". You can drag to move the component boxes wherever you want them. Component boxes will contain one or more little "port buttons" that are labeled with a port name and the instance name at the bottom. Any two components that have the same port can be connected by clicking first on the "port button" of one and then the same-named "port button" of the other one. A red line drawn between the two "port buttons" indicates that the components are connected. You can disconnect two components by reversing the connection procedure. Once all components have been connected, you can run the resulting application by clicking on a "go" or "run" button (port), which will typically be on the "driver" component. Figure 1 shows a simple example of a "wiring diagram" created with the GUI.

Figure 1. A “wiring diagram” for a simple CCA project. The CCA framework called Ccaffeine provides a “visual programming” GUI for linking components to create working applications.

Linking Components with an Interactive Command Line

You can run Ccaffeine scripting commands at an interactive command line by launching the command line interface with:

> ccafe-single

cca> help

See the next section for more information.

Linking Components with Scripts (in rc files)

You save Ccaffeine scripts in "rc files" (Ccaffeine resource files) and then use the CCA tool called ccafe-single to run them at a Unix command prompt, as in:

> ccafe-single --ccafe-rc <path-to-rc-file> >& task0.out

Ccaffeine scripts, generated by either Bocca or the Ccaffeine GUI, are stored in a CCA project folder within the "components/tests" folder.
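The same invocation can be driven from Python, which is convenient when several rc files need to be run in sequence. The following is only a minimal sketch: it assumes that ccafe-single is on your PATH, and the function names build_ccafe_command and run_rc_file are hypothetical helpers, not part of the CCA tool chain.

```python
import shlex
import subprocess


def build_ccafe_command(rc_file):
    """Return the argument list for running one rc file with ccafe-single."""
    return ["ccafe-single", "--ccafe-rc", str(rc_file)]


def run_rc_file(rc_file, log_file="task0.out"):
    """Run the script, sending stdout and stderr to log_file (like `>&`)."""
    command = build_ccafe_command(rc_file)
    with open(log_file, "w") as log:
        # stderr=subprocess.STDOUT merges both streams into the one log file.
        completed = subprocess.run(command, stdout=log, stderr=subprocess.STDOUT)
    return completed.returncode


# Show the command line without running it (ccafe-single may not be installed).
print(shlex.join(build_ccafe_command("components/tests/test_rc")))
```

Keeping the command construction separate from the call to subprocess.run makes it possible to inspect or log the command before anything is executed.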

Things to Remember

- Always start your Ccaffeine script with the "magic line": #!ccaffeine bootstrap file.

- Always end your Ccaffeine script with the quit command

- The path is where Ccaffeine is to look for CCA components (in .cca files, which point to libraries that comprise the component).

- The palette is the collection of available components.

- The arena is where components are instantiated and connected.

- <class_name> is set in the SIDL file where the component is defined.

- <instance_name> is chosen by the user but must be unique in the arena.
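The rules above are easy to encode in a small script generator. The sketch below is illustrative only: make_rc_script is a hypothetical helper, the instance-naming rule (project name followed by class name) is an assumption that simply mimics names such as myProjectDriver, and the component path is a placeholder. It uses the path set, repository get-global, instantiate, display component, connect and quit commands of the Ccaffeine scripting language.

```python
def make_rc_script(component_path, project, class_names, connections=()):
    """Build the text of a Ccaffeine rc script.

    connections holds tuples of
    (user_class, user_port, provider_class, provider_port).
    """
    def instance_name(class_name):
        # Assumed naming rule: myProject + Driver -> myProjectDriver.
        return project + class_name

    # Instance names must be unique in the arena.
    if len(set(class_names)) != len(class_names):
        raise ValueError("instance names must be unique in the arena")

    # The "magic line" always comes first.
    lines = ["#!ccaffeine bootstrap file.", f"path set {component_path}"]
    for name in class_names:
        lines.append(f"repository get-global {project}.{name}")
        lines.append(f"instantiate {project}.{name} {instance_name(name)}")
        lines.append(f"display component {instance_name(name)}")
    for user, user_port, provider, provider_port in connections:
        lines.append(
            f"connect {instance_name(user)} {user_port} "
            f"{instance_name(provider)} {provider_port}"
        )
    # The script always ends with the quit command.
    lines.append("quit")
    return "\n".join(lines) + "\n"


script = make_rc_script(
    "/path/to/myProject/components/lib",  # placeholder path
    "myProject",
    ["LawOfWallVelocity", "TrapezoidShape"],
    connections=[("LawOfWallVelocity", "shape", "TrapezoidShape", "shape")],
)
print(script)
```

The example connection links the "shape" uses port of LawOfWallVelocity to the "shape" provides port of TrapezoidShape, matching the ports declared in the Bocca script for this project.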

List of Ccaffeine Script Commands

Ccaffeine scripts only contain a limited number of commands and the command names are intuitive. The main ones are described here.

Connect the ports of two components:

connect <user_component> <user_port> <provider_component> <provider_port>

Disconnect ports of two components:

disconnect <user_component> <user_port> <provider_component> <provider_port>

Detail the connections between components:

display chain

Show what is currently in the arena:

display arena

List the uses and provides ports the component has registered:

display component <component_instance_name>

Show what is currently in the palette:

display palette

Start the application of linked components (equivalent to "run"):

go <component_instance_name> <port_name>

List the contents of the arena:

instances

Add a component instance to the arena:

instantiate <class_name> <instance_name>

List the contents of the palette:

palette

Print the current (Ccaffeine) path:

path

Add a directory to the end of the current path:

path append

Set the path from the value of the $CCA_COMPONENT_PATH environment variable:

path init

Add a directory to the beginning of the current path:

path prepend

Set the path to the value provided:

path set <path_name>

Terminate framework & return to the shell prompt:

quit (or exit or bye or x)

Remove a component from the arena:

remove <component_instance_name>

Add a component to the palette:

repository get-global <class_name>

A Sample Ccaffeine Script (in an rc file, components/tests/test_rc)

#!ccaffeine bootstrap file.

# ------- do not change anything ABOVE this line.-------------

path set /Users/peckhams/Desktop/cca_ex2/myProject/components/lib

repository get-global myProject.Driver

instantiate myProject.Driver myProjectDriver

display component myProjectDriver

repository get-global myProject.Finalize

instantiate myProject.Finalize myProjectFinalize

display component myProjectFinalize

repository get-global myProject.HalfCircleShape

instantiate myProject.HalfCircleShape myProjectHalfCircleShape

display component myProjectHalfCircleShape

repository get-global myProject.Initialize

instantiate myProject.Initialize myProjectInitialize

display component myProjectInitialize

repository get-global myProject.LawOfWallVelocity

instantiate myProject.LawOfWallVelocity myProjectLawOfWallVelocity

display component myProjectLawOfWallVelocity

repository get-global myProject.ManningVelocity

instantiate myProject.ManningVelocity myProjectManningVelocity

display component myProjectManningVelocity

repository get-global myProject.TrapezoidShape

instantiate myProject.TrapezoidShape myProjectTrapezoidShape

display component myProjectTrapezoidShape

#########################################

# Add a more complete example later.

#########################################

What is OpenMI?

The OpenMI project is (HISTORY, WHO, GOALS, FUNDING, open-source, etc.) *********

OpenMI consists of two main parts. First and foremost, it is an interface standard which transcends any particular language or operating system. (Recall the definition of an interface as a specific collection of methods from section ***.) However, it also comes with a software development kit (or SDK) that contains a large number of tools or utilities that are needed in order to implement the OpenMI interface. Both the interface and the SDK are available in C# and Java. (But the Java version of OpenMI 1.4 only became available in September 2008 and is still being tested.) The C# version is intended for use in Microsoft's .NET framework on a PC running Windows.

OpenMI is a request-reply system where one component makes a detailed request of another component that is able to provide something that it needs to perform a computation. This request is very detailed (you could even say, demanding) in that it specifies the where, when, what and how regarding the data that it needs. For example, "where" could specify a particular subset of elements in the computational grid (e.g. a watershed or a coastline), "when" could be some future time, "what" could be the particular computed quantity that is to be returned and "how" could specify a data operation, such as a spatial or temporal interpolation scheme. If the other component is using different units or a different computational grid or has a different dimensionality, it must do whatever is necessary to return the requested data in the form that the calling component needs. This is where it often needs to tap into the large set of supporting tools in OpenMI's SDK.
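The where/when/what/how structure of a request can be illustrated with a toy example. The sketch below is not the OpenMI API: the Request and Provider classes, their fields, and the two "how" options are assumptions made purely for illustration. The provider stores values at fixed times and interpolates in time to whatever moment the caller asks for.

```python
from dataclasses import dataclass


@dataclass
class Request:
    where: list   # element ids the caller needs values for
    when: float   # time at which the values are needed
    what: str     # name of the requested quantity
    how: str      # data operation: "linear" or "nearest" (in time)


class Provider:
    """Toy provider holding one time series of values per element."""

    def __init__(self, times, series):
        self.times = times    # sorted list of times
        self.series = series  # {quantity: {element_id: [value per time]}}

    def get_values(self, request):
        if request.how not in ("linear", "nearest"):
            raise ValueError("unsupported data operation: " + request.how)
        per_element = self.series[request.what]
        return [self._at_time(per_element[e], request.when, request.how)
                for e in request.where]

    def _at_time(self, values, when, how):
        times = self.times
        # Outside the stored range, hold the first or last value.
        if when <= times[0]:
            return values[0]
        if when >= times[-1]:
            return values[-1]
        for i in range(1, len(times)):
            if when <= times[i]:
                t0, t1 = times[i - 1], times[i]
                v0, v1 = values[i - 1], values[i]
                if how == "nearest":
                    return v0 if (when - t0) <= (t1 - when) else v1
                weight = (when - t0) / (t1 - t0)
                return v0 + weight * (v1 - v0)


provider = Provider([0.0, 10.0], {"discharge": {1: [2.0, 4.0], 2: [10.0, 20.0]}})
print(provider.get_values(Request([1, 2], 5.0, "discharge", "linear")))
```

The point of the design is that the caller never needs to know how the provider stores its data; all of the conversion work happens on the provider's side of the request.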

OpenMI has some fairly intuitive and well-thought-out terminology:

- Engine = computational core of a model

- Model = Engine + Data (an engine populated with specific input data, usually for some real place, e.g. a model for the discharge of the Rhine River)

- Element Set = the set of locations (computational elements) for which return values are needed

- Exchange Items = the actual quantities (e.g. wave height, wave angle) that are passed between two components, either as input or output.

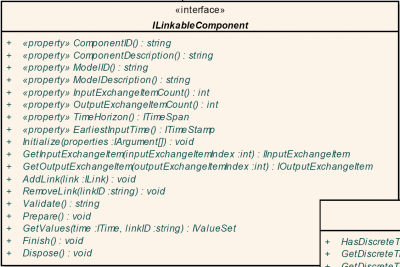

The UML class diagram for OpenMI is fairly complicated and is given in Appendix B: "UML Class Diagram for OpenMI". However, the ILinkableComponent is the primary interface, with the remainder of the diagram showing the subinterfaces that it depends on. Figure 2 shows the box for the ILinkableComponent interface. The interface contains "getter methods" for the eight properties (member data) listed at the top of the figure, as well as ten different methods (member functions). Note that there are separate Initialize(), Prepare() and Validate() methods, a GetValues() method, and separate Finish() and Dispose() methods. This is a refinement of the simple "IRF interface" discussed previously, designed to support complex requests between components. There are also two "self-describing" methods that a caller can use to get descriptions of all the input and output exchange items that a component can use or provide. The caller uses the InputExchangeItemCount and OutputExchangeItemCount properties (via their getters) to set the bounds of a loop that repeatedly calls the GetInputExchangeItem() or GetOutputExchangeItem() method. In OpenMI, an application called the Configuration Editor (part of the SDK) is used to graphically link components into applications, similar to CCA's Ccaffeine GUI. The Configuration Editor can use the methods just described to populate droplists which are then presented to a user so that they can manually "pair off" the inputs and outputs of two components.

Figure 2. UML class diagram for OpenMI's ILinkableComponent interface.
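The "self-describing" loop described above can be sketched in Python. This is only a mock-up: ToyComponent is a hypothetical stand-in that borrows the member names InputExchangeItemCount, OutputExchangeItemCount, GetInputExchangeItem() and GetOutputExchangeItem() from the interface, and it represents each exchange item as a plain quantity name rather than a full exchange-item object. The quantity "shoreline position" is invented for the example.

```python
class ToyComponent:
    """Minimal stand-in exposing only the self-describing members."""

    def __init__(self, inputs, outputs):
        self._inputs = list(inputs)
        self._outputs = list(outputs)

    @property
    def InputExchangeItemCount(self):
        return len(self._inputs)

    @property
    def OutputExchangeItemCount(self):
        return len(self._outputs)

    def GetInputExchangeItem(self, index):
        return self._inputs[index]

    def GetOutputExchangeItem(self, index):
        return self._outputs[index]


def list_exchange_items(component):
    """Use the counts to drive loops over the two getter methods."""
    inputs = [component.GetInputExchangeItem(i)
              for i in range(component.InputExchangeItemCount)]
    outputs = [component.GetOutputExchangeItem(i)
               for i in range(component.OutputExchangeItemCount)]
    return inputs, outputs


def matching_quantities(provider, consumer):
    """Quantities the provider outputs that the consumer accepts as input."""
    _, outputs = list_exchange_items(provider)
    inputs, _ = list_exchange_items(consumer)
    return [name for name in outputs if name in inputs]


waves = ToyComponent([], ["wave height", "wave angle"])
coast = ToyComponent(["wave height"], ["shoreline position"])
print(matching_quantities(waves, coast))
```

A linking tool could present the result of list_exchange_items() in two droplists and use something like matching_quantities() to suggest candidate pairings, leaving the final choice to the user.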

OpenMI has defined a type of file called an OMI file that a "framework" (like the OpenMI Configuration Editor) can use to locate a library file (e.g. DLL) that implements the ILinkableComponent interface for a model engine as well as associated input data. OMI files are XML files with a predefined XSD (XML Schema Definition) format which contain information about the class to instantiate, the assembly hosting the class (under .NET) and any arguments needed by the Initialize() method (e.g. input files). For more information on OMI files and their XSD, see Gijsbers and Gregersen (2007).
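To make the role of an OMI file concrete, the sketch below reads a small OMI-like XML document with Python's standard library. The element and attribute names used here (LinkableComponent, Type, Assembly, Arguments, Argument, Key, Value) and the file names are assumptions for illustration, and the XML namespace that a real OMI file would declare is omitted; consult the XSD for the authoritative format.

```python
import xml.etree.ElementTree as ET

# Illustrative content only; all names and values are hypothetical.
SAMPLE_OMI = """<LinkableComponent Type="MyModel.LinkableComponent"
                   Assembly="bin/MyModel.dll">
  <Arguments>
    <Argument Key="InputFile" ReadOnly="true" Value="rhine.inp" />
  </Arguments>
</LinkableComponent>
"""


def read_omi(text):
    """Extract the class, the hosting assembly and the Initialize() arguments."""
    root = ET.fromstring(text)
    arguments = {arg.get("Key"): arg.get("Value")
                 for arg in root.iter("Argument")}
    return {
        "type": root.get("Type"),
        "assembly": root.get("Assembly"),
        "arguments": arguments,
    }


info = read_omi(SAMPLE_OMI)
print(info["type"], info["assembly"], info["arguments"])
```

A framework would use the first two values to load and instantiate the component and would then pass the arguments to its Initialize() method.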

More information on OpenMI can be found in the papers by Gregersen et al. (2007) and Gijsbers and Gregersen (2007), and in numerous online documents at the OpenMI Main Page, the OpenMI Wiki and SourceForge.

Where Can I Get Some Components?

CSDMS staff members are currently working on an automated wrapping tool that will convert a model with a simple IRF interface (see Section ***) and appropriate metadata for all "exchange items" into an OpenMI-compliant component. A Bocca script for creating an "OpenMI port" for use in a CCA project is given in Appendix C.

The following groups and projects are working on producing components that can be used in a CCA-compliant framework: PETSc, hypre, Taos, ITAPS, etc. (see previous section on CCA)

The Object Modeling System is another component-based, open-source project, sponsored by the US Department of Agriculture (USDA), that has a number of Java and Fortran components with an IRF interface (but with the method names initialize(), execute() and cleanup()). Most of them relate to hydrologic processes or are utilities, and they should be fairly straightforward to convert to OpenMI components.

The GEOTOP project, an open-source modeling effort based in Italy, has also developed a large number of hydrologic modeling components in Java. They have been working with OpenMI and expect to generate OpenMI-compliant components. Deltares, DHI (Danish Hydraulic Institute) and Wallingford Software are three large hydrologic modeling companies in Europe that are participating in the OpenMI project. OpenMI "wrappers" for many of their (commercial) models are available, or soon will be. Other software projects such as Visual MODFLOW are starting to convert other models to OpenMI components. As the OpenMI interface standard grows in popularity, it is expected that more components will become available.

How to Convert IDL Code to Numerical Python

IDL (Interactive Data Language) is an array-based programming language that is sold by ITT Visual Information Solutions. IDL, Matlab and other commercially sold languages are not open-source and are not supported by Babel. However, there is an open-source IDL-to-Python converter called i2py. The current version is an alpha release, but the CSDMS team has extended it to the point where it can convert the computational engine of most models that are written in IDL.

The following steps assume that you have Python installed on your computer. If you don't, you can download Python from [12], as well as the "numpy" and "matplotlib" modules. Python itself and most modules written for it are open-source.

- Download the i2py tool from the CSDMS website.

- Copy the i2py-0.2.0 folder to your desktop.